Estimating Cost of Query

Our PIM API is built to let you do it all, so how do we protect our server from a possible influx of deeply nested GraphQL requests?

One risk of making an API available to the public is that someone will (perhaps accidentally) overuse it, costing us execution time or even causing a denial of service for other users.

One way to reduce this risk is to limit the rate at which a user can use our API. Typically this is measured as the number of requests allowed per unit of time. But since we have built our API using GraphQL, the API user is the one determining each request’s complexity.

This makes calculating a fair limit even more of a guessing game. Over-limit a very simple query, and you’re being needlessly restrictive; under-limit a very complex query, and your defenses are too weak.

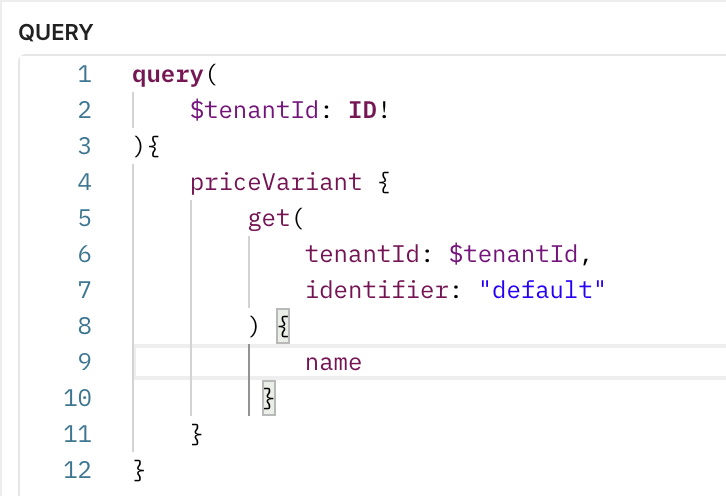

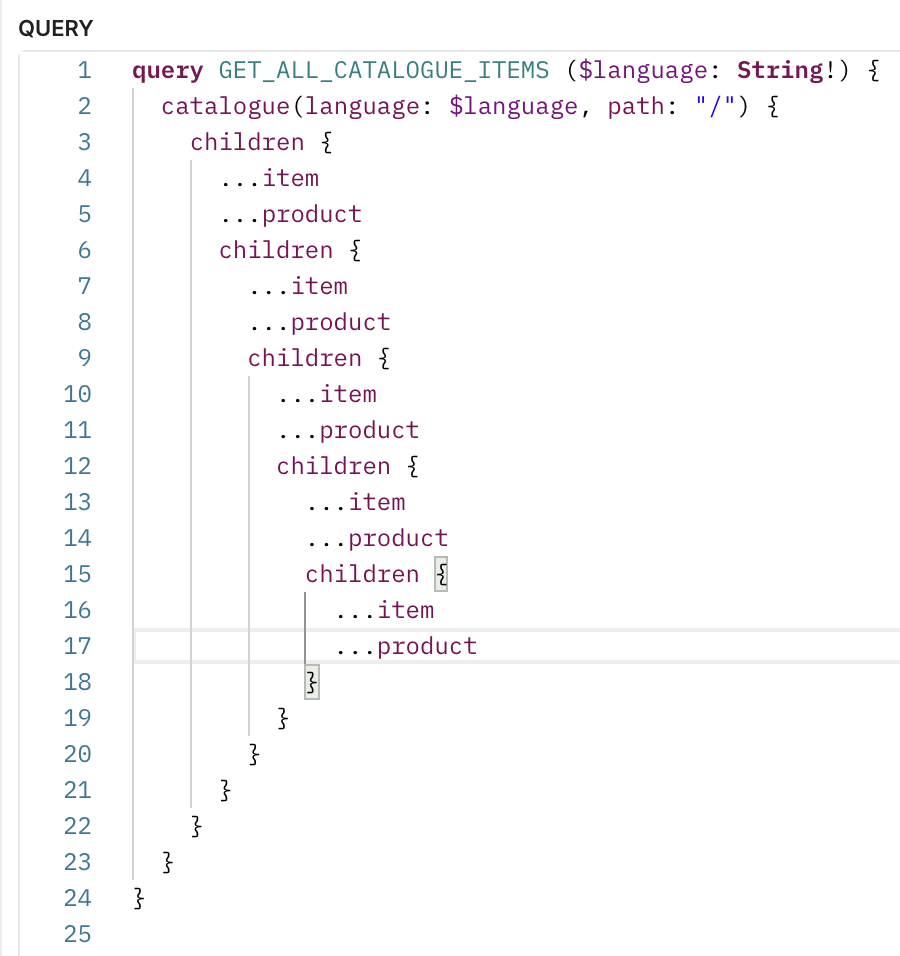

Should the first query above be rate limited to the same degree as the second?

To account for the vastly different server effort that GraphQL queries can require, we want to calculate a cost score based on what the query is asking of the server. If that cost is too high, we deny the query before it gets executed.

This is especially useful for some of our catalog queries, since we give users the flexibility to nest and relate products, prices, topics, and images as they see fit, along with the ability to query their connected objects in a single request. This is great for the user but potentially risky for our backend services. A cost score based on the query itself should be higher the more levels of nested items the user requests.
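To make the idea concrete, here is a minimal sketch of a cost score that grows with nesting and a check that rejects a query before execution. It is not how graphql-cost-analysis computes costs internally: the `QueryField` shape, the depth-based weighting, `estimateCost`, `assertAffordable`, and the `MAX_COST` value are all hypothetical and chosen only for illustration.

```typescript
// Hypothetical, simplified stand-in for a parsed GraphQL selection set.
interface QueryField {
  name: string
  children?: QueryField[]
}

// Assumption for illustration: a field costs as much as its nesting depth,
// so the same field gets more expensive the deeper it sits in the query.
function estimateCost(fields: QueryField[], depth = 1): number {
  return fields.reduce(
    (total, field) =>
      total + depth + estimateCost(field.children ?? [], depth + 1),
    0,
  )
}

// Hypothetical limit; a real value would come from monitoring actual traffic.
const MAX_COST = 10

// Returns the cost, or throws before any resolver would have to run.
function assertAffordable(fields: QueryField[]): number {
  const cost = estimateCost(fields)
  if (cost > MAX_COST) {
    throw new Error(`Query cost ${cost} exceeds the maximum of ${MAX_COST}`)
  }
  return cost
}

const flatQuery: QueryField[] = [
  { name: 'products', children: [{ name: 'name' }] },
]

const nestedQuery: QueryField[] = [
  {
    name: 'products',
    children: [
      { name: 'name' },
      {
        name: 'topics',
        children: [
          { name: 'name' },
          { name: 'images', children: [{ name: 'url' }] },
        ],
      },
    ],
  },
]
```

With these assumptions, `flatQuery` scores 3 and passes, while `nestedQuery` scores 15 and is rejected by `assertAffordable`.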

Finally, a note on how we chose to build this: although NestJS has great integration with the graphql-query-complexity library, we don’t use a code-first approach exclusively throughout our apps.

Still, our solution will look much like the NestJS example that uses an ApolloServerPlugin instance to execute the cost calculation at the didResolveOperation request lifecycle event.

Progress and Lessons Learned So Far

Technical lessons learned from adding a GraphQL cost analyzer plugin to our Apollo service

Let’s first review some of the challenges we encountered when adding the graphql-cost-analysis library to our Apollo backend.

The example docs for this library show adding its exported `costAnalysis` function to your GraphQL validationRules. One key point here is the inclusion of variables through an available request or graphQLParams object. Due to the way we have set up our GraphQL apps across NestJS and non-NestJS frameworks, this object is not available to us.

We therefore needed to do some of the work that graphql-cost-analysis would otherwise do for us. Instead of a ValidationRule, we chose to use an ApolloServerPlugin because it, too, runs before the GraphQL resolvers are executed. Using a new plugin instance, we pass the request context and GraphQL schema to a new QueryCostAnalyzerService that is responsible for working with graphql-cost-analysis.

```typescript
import { QueryCostAnalyzerService } from '../query-cost-analyzer.service'
import {
  ApolloServerPlugin,
  GraphQLRequestListener,
  GraphQLRequestContext,
} from 'apollo-server-plugin-base'

export class QueryCostAnalyzerPlugin implements ApolloServerPlugin {
  queryCostAnalyzerService: QueryCostAnalyzerService
  savedSchema

  constructor() {
    this.queryCostAnalyzerService = new QueryCostAnalyzerService()
  }

  // we will need the schema for GraphQL validation; grab it here
  async serverWillStart({ schema }) {
    this.savedSchema = schema
  }

  public async requestDidStart(
    requestContext: GraphQLRequestContext,
  ): Promise<GraphQLRequestListener> {
    const { queryCostAnalyzerService, savedSchema } = this

    return {
      didResolveOperation: async () => {
        // pass the GraphQLRequestContext and the schema to our own QueryCostAnalyzerService;
        // await it so that a rejection from getCost (if it is async) surfaces before execution
        await queryCostAnalyzerService.getCost({
          requestContext,
          schema: savedSchema,
        })
      },
    }
  }
}
```

The QueryCostAnalyzerPlugin gets passed to our Apollo Federation config’s plugins array.

The new QueryCostAnalyzerService then builds a ValidationContext from the requestContext and schema, and passes the validationContext to our costAnalyzer.

Now that our cost analyzer is generating costs for each query, we can start fine-tuning the cost of different fields. We’ve set a `defaultCost` value of 1 in our cost analyzer options. This is a useful start, but it does not differentiate between, for example, a query that asks for the first 5 results and a query that asks for the first 500 results.

We can scale the cost according to the size of the requested array response by defining multipliers on the graphql-cost-analysis costMap. We set these up on `first` and `last` GraphQL arguments, so a request for the first 10 documents returned by a search will have ten times the cost of requesting the first 1 document returned from a search.
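The scaling described here can be sketched as follows. This is a simplified, self-contained illustration and not the library's implementation: the `FieldCost` shape is only loosely modelled on a cost map entry, and `fieldCost`, `FieldArgs`, and `searchCost` are hypothetical names.

```typescript
// Hypothetical cost entry: a base complexity plus the names of the
// arguments whose values scale it.
interface FieldCost {
  complexity: number
  multipliers: string[]
}

// Argument values as supplied with the query; missing arguments are undefined.
type FieldArgs = Record<string, number | undefined>

// Multiply the base complexity by every multiplier argument that was supplied.
function fieldCost(cost: FieldCost, args: FieldArgs): number {
  return cost.multipliers.reduce((total, argName) => {
    const value = args[argName]
    return value === undefined ? total : total * value
  }, cost.complexity)
}

// Assumed entry for a search field that scales with `first` and `last`.
const searchCost: FieldCost = { complexity: 1, multipliers: ['first', 'last'] }

const costOfOne = fieldCost(searchCost, { first: 1 })
const costOfTen = fieldCost(searchCost, { first: 10 })
```

Under these assumptions, `costOfTen` is ten times `costOfOne`, which matches the behaviour we want for paginated search results.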

But we’ve also told GraphQL in our schema definition to use a default `first` value. Now, if the user asks for the last 5 documents, the calculated score will be multiplied by both 5 and that default `first` value! This isn’t fair to the user at all, so we have to be sure to ignore the default `first` value whenever the user provides a `last` value.
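A minimal sketch of that adjustment, under some assumptions: `DEFAULT_FIRST` stands in for whatever default the schema declares (20 is made up), `PageArgs` contains only the arguments the user explicitly wrote, and `naiveMultiplier` and `fairMultiplier` are hypothetical helpers rather than part of graphql-cost-analysis.

```typescript
// Hypothetical stand-in for the schema's default `first` value.
const DEFAULT_FIRST = 20

// Only the arguments the user explicitly wrote in the query.
interface PageArgs {
  first?: number
  last?: number
}

// Naive version: always applies the default, so `last: 5` is multiplied
// by the default `first` as well.
function naiveMultiplier({ first, last }: PageArgs): number {
  return (first ?? DEFAULT_FIRST) * (last ?? 1)
}

// Adjusted version: the default `first` only counts when `last` is absent.
function fairMultiplier({ first, last }: PageArgs): number {
  if (last !== undefined) {
    return first === undefined ? last : first * last
  }
  return first ?? DEFAULT_FIRST
}
```

With a default of 20, a request for `last: 5` gets a multiplier of 100 from the naive version but 5 from the adjusted one. In a real server the default is usually merged into the argument values before a plugin sees them, so telling the two cases apart may require inspecting the query document itself.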

Moving Forward

Fine-tuning the cost values on each field and catching issues like the multiplier one is an iterative process requiring some monitoring. We have to be able to see what cost values are being calculated for different queries and adjust values accordingly.

This means there will be a follow-up to this post in which I’ll show how we set up monitoring of these values, what that looks like, and how the query budget works for us.

If you have any questions about this, reach out to us via Twitter or join the Crystallize Slack community.