Frontend Performance Measuring, KPIs, and Monitoring

Frontend performance has a huge impact on user experience, business goals, and SEO rankings. A fast-loading page makes it easier for visitors to engage with your content (and more likely to stick around and convert).

Conversely, a slow site can tank your conversion rates and search visibility. As we head into 2026, web developers are more performance-conscious than ever. Modern frameworks such as Next.js, Astro, Remix, Svelte, and others are embracing new paradigms – from hybrid rendering with React Server Components to partial hydration – all in the quest for better speed and interactivity.

But how do you actually measure performance? And why do you need to be data-driven, continuously monitoring and optimizing for speed?

Let’s explore that. Let’s go millisecond hunting! 🚀

Determine Your Performance KPIs

First, identify your frontend performance KPIs (Key Performance Indicators) – the metrics that will define “fast” for your team. These are the benchmarks you’ll track and improve. Without clear KPIs, you’re flying blind or chasing gut feelings (and you might not be wrong, just not backed by data). By setting the right KPIs, everyone from developers to executives speaks the same language about performance.

We highly recommend choosing industry-standard metrics as your KPIs. This lets you leverage a wide array of tools and aligns your goals with what impacts SEO (search engines like Google do factor speed into rankings). In practice, this means avoiding vanity metrics and focusing on actionable ones that reflect real user experience. A good KPI should tell you what to fix, be something you can realistically improve, and matter to users (not just impress on a dashboard).

At the moment, the best set of performance metrics to adopt as KPIs are the Core Web Vitals. These are common across the industry and backed by Google. Although they do not encompass ALL the available frontend performance metrics, they include the most important ones with the biggest impact on user experience that can be reliably measured.

Core Web Vitals

In May 2020, Google introduced Core Web Vitals as a standardized way to quantify user experience quality on the web. These metrics have since become the de facto gold standard for frontend performance measurement. They are integrated into tools like Lighthouse, PageSpeed Insights, and Search Console, and Google uses them as part of its page experience ranking signals.

As of now, Core Web Vitals consist of three key metrics:

- Largest Contentful Paint (LCP) – measures loading performance (when the main content is visible)

- Interaction to Next Paint (INP) – measures responsiveness to user interactions (replaced First Input Delay in March 2024)

- Cumulative Layout Shift (CLS) – measures visual stability (how much the layout moves around)

These three metrics cover the most important aspects of loading speed, interactivity, and stability. We’ll break down each one below as our primary performance KPIs. But first…

Field Data vs. Lab Data

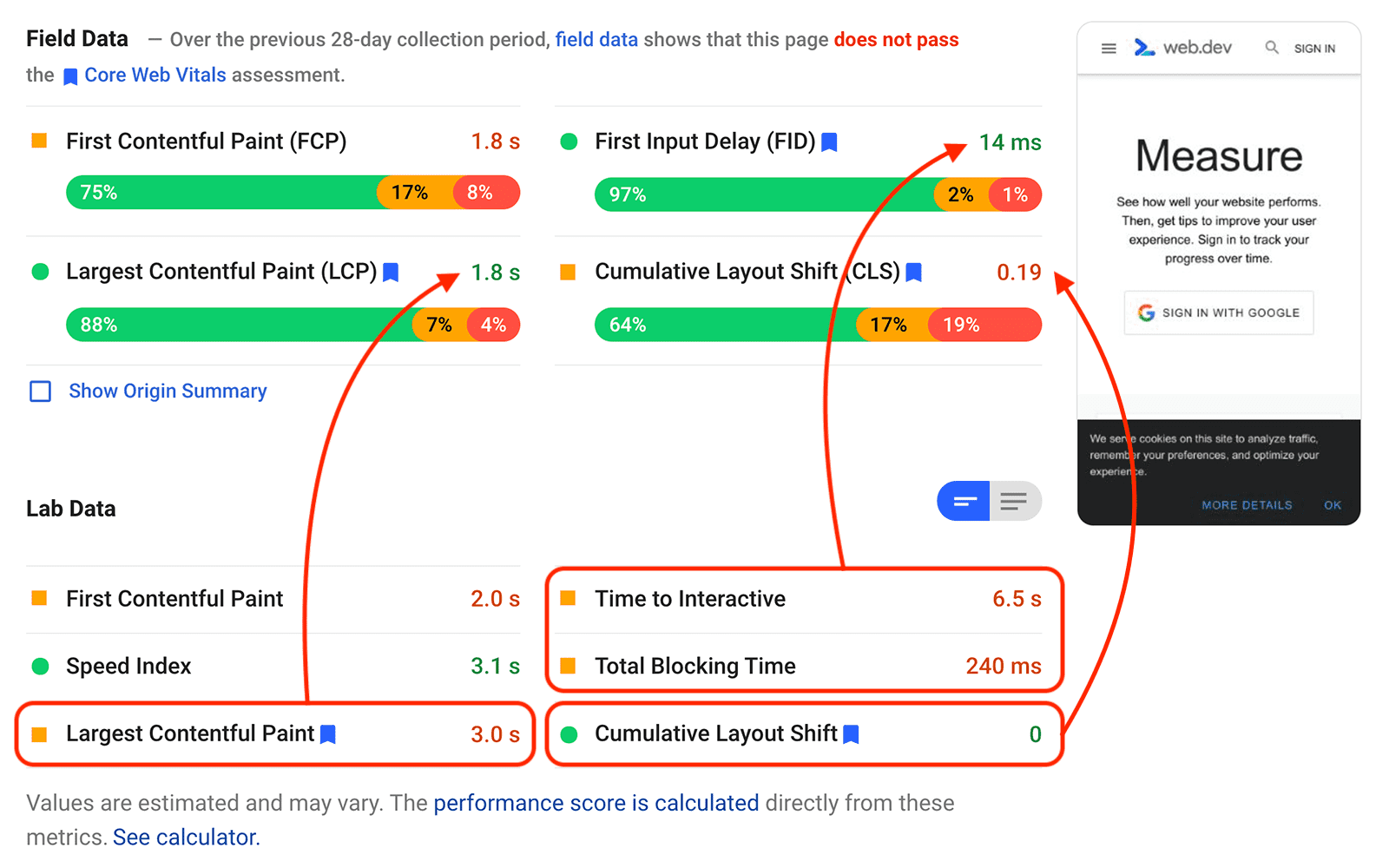

When you run a performance test (e.g., PageSpeed Insights), you may notice it shows “Field Data” and “Lab Data.”

Field Data refers to metrics collected from actual users in the wild (for Chrome users, this comes from the Chrome User Experience Report). This is what real users experienced on your site, and it’s what Google cares about for rankings. If your real-user LCP or INP is poor, that’s a genuine problem – and it will reflect in SEO as well. Field data accounts for variability in device types (phones vs. laptops, etc.) and network conditions (WiFi, 5G, a busy café network, etc.). Not everyone has a high-end device or fast network, so field metrics capture the actual range of performance your users get.

💡Pro tip: Differences in devices and networks can lead to vastly different results – a mid-range phone on 3G will paint a very different picture than a desktop on fiber. Always consider your audience’s context.

Image source: web.dev’s Why Lab and Field Data Can Be Different article.

Lab Data, on the other hand, is performance data gathered in a controlled test environment – for example, a single run on a simulated mobile device and throttled network in Lighthouse. Lab tests are fantastic for debugging and testing optimizations (since you can consistently re-run them to see if a code change improved things). However, lab data is not what your average user sees, and Google’s ranking algorithm does not use lab data at all. It’s the field data that ultimately matters for UX and SEO.

In short: use lab data to experiment and optimize, but judge your success by the field data.

But It’s Not Just About Core Web Vitals

Core Web Vitals are important, but remember they’re part of a bigger picture. Google’s Page Experience signals include these vitals, among other factors (mobile-friendliness, HTTPS, pop-ups). And when it comes to SEO, not all performance reports are created equal – the only place that truly determines your CWV status for search rankings is the Google Search Console report (which pulls from the official Chrome UX field data).

Finally, performance isn’t the only thing that matters. You could have a super-fast site with mediocre content, and Google will still rank the site with the better content higher in many cases. Google itself has stated that relevant content can outweigh a poor page experience in rankings. Users ultimately seek the best content, and if your page is the most relevant answer, Google won’t ignore it just because it’s a bit slow. That said, between two equally relevant pages, the faster one will likely win (and a fast site gives you a competitive edge in user satisfaction, conversion, and many other ways).

In short, don’t neglect content quality while optimizing performance – invest in both for the best results.

With those caveats in mind, let’s dive into the core metrics themselves and see why each KPI is important, what good looks like, and how they affect users and businesses.

Both our CWV page (from the standpoint of metrics) and frontend performance checklist (from a general improvement standpoint) hold great suggestions on optimizing all KPI metrics mentioned here.

Performance KPI 1: Time to First Byte (TTFB)

Time to First Byte (TTFB) indicates how quickly your server responds to a request, i.e., the delay between a user requesting a page and the first byte of the response arriving in their browser. That initial server response sets the tone: if TTFB is slow, everything else (images, scripts, etc.) is pushed out later as well.

From a user’s perspective, a long TTFB means the site hasn’t even started loading – it feels unresponsive or down. Imagine clicking a link and nothing happens for a second or two; many users will start to bounce or get frustrated. From a business perspective, TTFB is often a clue about backend efficiency. A sluggish TTFB might indicate server congestion, inefficient server-side code, or network latency issues. It can happen if your server is underpowered, your database is slow, or you’re serving users from an origin server that’s far away from them.
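To tell those causes apart, it helps to split TTFB into its phases. Here is a minimal sketch that assumes an object shaped like the browser's PerformanceNavigationTiming entry; the function name `breakDownTtfb` and the sample numbers are hypothetical, for illustration only.

```javascript
// Split a navigation timing entry into the phases that make up TTFB.
// `entry` mirrors the fields of a PerformanceNavigationTiming object (all times in ms).
function breakDownTtfb(entry) {
  return {
    redirect: entry.redirectEnd - entry.redirectStart,
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    connection: entry.connectEnd - entry.connectStart, // TCP plus TLS handshake
    serverWait: entry.responseStart - entry.requestStart, // request sent until first byte
    ttfb: entry.responseStart - entry.startTime,
  };
}

// Hypothetical sample values, not real measurements.
const sampleEntry = {
  startTime: 0,
  redirectStart: 0,
  redirectEnd: 0,
  domainLookupStart: 5,
  domainLookupEnd: 35,
  connectStart: 35,
  connectEnd: 115,
  requestStart: 120,
  responseStart: 540,
};

console.log(breakDownTtfb(sampleEntry));

// In a browser, the real entry would come from:
//   const [entry] = performance.getEntriesByType('navigation');
```

In this made-up sample, most of the 540 ms is spent waiting on the server (420 ms), which would point to backend work rather than DNS or connection setup.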

What’s a good TTFB? Google recommends TTFB < 200 ms as a benchmark for fast websites (source), while web.dev’s rough guide treats a TTFB of 0.8 seconds or less as good for most sites. With a well-optimized backend and CDN strategy, TTFB in the low hundreds of milliseconds is achievable. If your audience is global, using a Content Delivery Network (CDN) or edge servers can help keep TTFB low by serving content from a location closer to the user.

Why does TTFB matter for your business? For two reasons: user impression and bottleneck avoidance. First, users quickly notice when a site is slow to start responding. It’s a bad first impression and increases the chance they abandon the visit. Second, TTFB is the foundation of the entire page load, so a poor TTFB drags all other metrics down (e.g., your LCP can’t be good if your server takes 2 seconds just to send the first byte).

Optimizing TTFB often involves backend improvements (database queries, caching, efficient code) and infrastructure tweaks (upgrading hosting, enabling keep-alive, using CDNs). It’s an important KPI for both developers and the business to monitor: it bridges the gap between backend performance and frontend experience.

💡Pro tip: if you see “Reduce initial server response time” in PageSpeed Insights, that’s essentially telling you your TTFB is too high (typically PSI flags this if TTFB > ~600 ms). It’s a sign to investigate server-side performance.

Performance KPI 2: Largest Contentful Paint (LCP)

LCP is the king of user-first metrics. Largest Contentful Paint (LCP) measures loading speed in terms of when the main content of the page is displayed. More specifically, it tracks the render time of the largest content element in the viewport – often an image, headline, or block of text – from when the page started loading.

In essence, this metric directly correlates with the user’s perception of speed. If LCP is slow, the site feels slow because the thing the user cares about (e.g., the article text, the product image, the hero section) is not yet visible.

What’s a good LCP? Google’s benchmark for “good” is within 2.5 seconds of when the page first starts loading. Above 4s is considered poor. Note that these are measured at the 75th percentile of users – meaning, 75% of visits should hit that target (because there will always be some slower cases). Hitting good LCP consistently can be challenging on media-rich sites, but it’s a worthy goal because it greatly improves user retention.
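To make the 75th-percentile rule concrete, here is a minimal sketch that takes raw field samples, finds the p75 value, and rates it. The thresholds are the ones quoted in this article for LCP, INP, and CLS; the helper names and the sample values are hypothetical, and the nearest-rank percentile method is a simplifying assumption.

```javascript
// [good upper bound, poor lower bound] per metric.
const CWV_THRESHOLDS = {
  LCP: [2500, 4000], // milliseconds
  INP: [200, 500], // milliseconds
  CLS: [0.1, 0.25], // unitless score
};

// Nearest-rank percentile: the smallest sample that at least p% of samples do not exceed.
function percentile(samples, p) {
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Classify a value the way the "good / needs improvement / poor" buckets work.
function rateMetric(name, value) {
  const [good, poor] = CWV_THRESHOLDS[name];
  if (value <= good) return 'good';
  if (value <= poor) return 'needs improvement';
  return 'poor';
}

// Hypothetical LCP samples (ms) from eight page views.
const lcpSamples = [1200, 1800, 2100, 2400, 2600, 3100, 1900, 5200];
const lcpP75 = percentile(lcpSamples, 75);

console.log(lcpP75, rateMetric('LCP', lcpP75)); // 2600 'needs improvement'
```

Note that five of the eight samples are under 2.5 s, yet the page still misses the "good" bucket, because the target applies to the 75th percentile and not to the average or median.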

Business perspective. LCP carries 25% of Google’s Lighthouse performance score (check the calculator), one of the heaviest weights, and it is one of the most critical metrics in Core Web Vitals. For an e-commerce site, a good LCP means the product image and price load quickly, so the user is more likely to stay and maybe add the product to the cart. For a content site, it means the article text appears quickly, so the user is more likely to read it instead of bouncing back to search results. There’s a clear link between faster LCP and higher conversions or lower bounce rates (faster sites keep users’ attention).

How do you improve LCP? Common culprits of a slow LCP include large, unoptimized images, render-blocking resources (like heavy CSS or JS that delays content rendering), or slow server responses (back to TTFB!). Optimizing LCP usually means optimizing and compressing images, using modern image formats, ensuring text is quickly renderable (loading critical CSS early and deferring non-critical JS), leveraging browser caching, and prioritizing the loading of above-the-fold content. If you make significant improvements to these areas, you should see LCP come down.

Performance KPI 3: Cumulative Layout Shift (CLS)

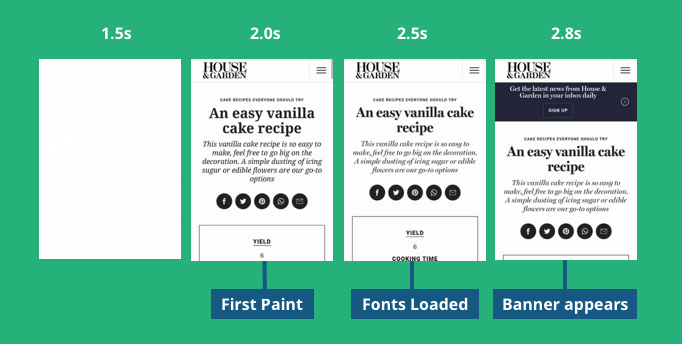

Cumulative Layout Shift (CLS) measures the visual stability of your page. It quantifies how much the layout moves around during loading (or even later). You’ve likely experienced a page that loads and suddenly content shifts up or down, causing you to lose your place or click the wrong thing. Those jarring movements are what CLS captures; it tracks how much stuff jumps around on screen.

Example image source: DebugBear’s Measure And Optimize Cumulative Layout Shift (CLS) article.

A stable page (no unexpected shifts) feels smooth and professional, while a page that jerks around feels clunky and frustrating. This is especially important on mobile devices, where screen space is limited and even small shifts can cause big mis-taps.

What’s a good CLS? A CLS of 0 means zero movement (pixel-perfect stability). Google considers 0.1 or less good and anything above 0.25 poor. Few things annoy users more than a page that keeps moving as they try to use it. You go to tap a button and, oops, it moves and you end up clicking something else. That’s the classic example, and CLS is literally designed to catch that. In practice, you don’t always need zero, but staying under 0.1 ensures that any shifts that do happen are minor and unlikely to disrupt the user.
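It is worth knowing how the number is put together. As Chrome defines the metric, individual shifts are grouped into session windows (less than 1 second between shifts, at most 5 seconds per window), and the reported CLS is the largest window total, with shifts that follow recent user input left out. The sketch below assumes entries shaped like the browser's `layout-shift` entries; `computeCls` and the sample data are hypothetical.

```javascript
// Compute CLS as the largest "session window" of layout shifts.
// Each shift mirrors a browser layout-shift entry: startTime (ms), value, hadRecentInput.
function computeCls(shifts) {
  let largestWindow = 0;
  let windowTotal = 0;
  let windowStart = null;
  let previousTime = null;

  for (const shift of shifts) {
    if (shift.hadRecentInput) continue; // shifts right after user input are expected

    const continuesWindow =
      windowStart !== null &&
      shift.startTime - previousTime < 1000 && // gap to the previous shift under 1 s
      shift.startTime - windowStart < 5000; // window no longer than 5 s

    if (continuesWindow) {
      windowTotal += shift.value;
    } else {
      windowTotal = shift.value;
      windowStart = shift.startTime;
    }
    previousTime = shift.startTime;
    largestWindow = Math.max(largestWindow, windowTotal);
  }
  return largestWindow;
}

// Hypothetical shifts: two during load, one caused by a tap, one late ad slot.
const sampleShifts = [
  { startTime: 400, value: 0.04, hadRecentInput: false },
  { startTime: 900, value: 0.03, hadRecentInput: false },
  { startTime: 3000, value: 0.2, hadRecentInput: true },
  { startTime: 6000, value: 0.12, hadRecentInput: false },
];

console.log(computeCls(sampleShifts).toFixed(2)); // "0.12"
```

Here the late shift at 6 seconds forms its own window and, at 0.12, becomes the page's CLS even though the load-time shifts only added up to about 0.07.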

From the business/user perspective, CLS is about preventing frustration. In e-commerce, we’ve seen cases where a “Buy Now” button shifts and the user ends up clicking something else – that’s potentially a lost sale (or at least a moment of confusion). High CLS can erode trust; the site feels glitchy. On the flip side, a stable interface conveys polish and reliability.

Google also cares about CLS: its weight in the Lighthouse performance score was raised from 15% to 25% in the Lighthouse 10 update (source). This increase underscores how important visual stability is considered in overall performance UX.

Common causes of layout shifts include images or videos without specified dimensions, ads or embeds that dynamically push content, and custom fonts that cause text to re-layout when they load. The fixes are usually straightforward, such as always including width and height attributes (or CSS aspect-ratio boxes) for media so the browser allocates space in advance. Many, if not all, of them are covered in our Core Web Vitals and frontend checklist pages. With a bit of planning, you can get your CLS close to zero.

Performance KPI 4: Interaction to Next Paint (INP)

Interaction to Next Paint (INP) is the newest Core Web Vital on the block, and it’s all about measuring responsiveness. Specifically, INP looks at how quickly your page responds to user interactions: not just the first interaction (as the old First Input Delay (FID) metric did), but every interaction a user makes throughout their visit. It then reports a single value that represents (approximately) the slowest interaction the user experienced.
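The "approximately" matters. As Google documents the metric, INP ignores one of the highest interaction latencies for every 50 interactions on a page, so a single freak outlier on a busy page does not define the score. A minimal sketch of that selection rule, with a hypothetical function name and made-up durations:

```javascript
// Pick the interaction latency that represents the page, given all interaction durations (ms).
// One of the slowest interactions is skipped for every 50 interactions recorded.
function estimateInp(interactionDurations) {
  if (interactionDurations.length === 0) return undefined; // no interactions, no INP
  const slowestFirst = [...interactionDurations].sort((a, b) => b - a);
  const skipped = Math.floor(interactionDurations.length / 50);
  return slowestFirst[Math.min(slowestFirst.length - 1, skipped)];
}

// A short visit: the slowest interaction is reported as is.
console.log(estimateInp([40, 80, 320, 64])); // 320

// A long visit with 60 interactions: the single 900 ms outlier is skipped.
const longVisit = [900, 350, ...Array(58).fill(50)];
console.log(estimateInp(longVisit)); // 350
```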

LCP measures loading, CLS measures visual stability, and INP extends into the interactive phase of the page’s life. Chrome usage data shows users spend most of their time on a page after it loads (scrolling, clicking, navigating, etc.), so it makes sense to measure performance during that phase too. INP is particularly important for JavaScript-heavy applications where user actions might trigger lots of processing.

What’s a good INP? 200 ms or less is good; more than 500 ms is poor. So you want that worst-case interaction (at the 75th percentile of users) to be under 0.2 seconds ideally. That threshold is a rough guideline for what feels “instant” to a user in terms of interface response.

From the user’s perspective, INP is essentially tracking how snappy your site feels when they actually use it. Does the UI react quickly when I do something, every time? Or are there moments where it hangs or stutters? A poor INP can directly lead to frustration, errors (double submissions), or users abandoning a process.

For businesses, INP is critical for web apps, interactive forms, and checkouts – any scenario where the user isn’t just consuming content but interacting with it. For example, a slow-reacting add-to-cart button might cause a customer to tap it multiple times or give up if there's no feedback.

The usual blockers are long tasks on the main thread (JavaScript execution, style recalculation, layout, etc.) that prevent the browser from updating the UI promptly. The key to improving INP is to break up long tasks, optimize JavaScript, and use techniques like asynchronous processing or web workers to keep the main thread free.
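Breaking up a long task can be as simple as processing work in small batches and yielding between them. The sketch below is one common pattern, not the only one; `processInChunks`, its `chunkSize` default, and the sample handler are hypothetical names chosen for illustration.

```javascript
// Process items in small batches and yield between batches,
// so the browser gets a chance to handle input and paint.
async function processInChunks(items, handleItem, chunkSize = 50) {
  const results = [];
  for (let i = 0; i < items.length; i += chunkSize) {
    for (const item of items.slice(i, i + chunkSize)) {
      results.push(handleItem(item));
    }
    // Yield: setTimeout queues a new task, which lets pending events run first.
    await new Promise((resolve) => setTimeout(resolve, 0));
  }
  return results;
}

// Usage: double five numbers, two at a time.
processInChunks([1, 2, 3, 4, 5], (n) => n * 2, 2).then((doubled) => {
  console.log(doubled); // [ 2, 4, 6, 8, 10 ]
});
```

The total work is the same, but no single task holds the main thread for the whole list, which is what keeps an interaction that lands mid-way from waiting on everything.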

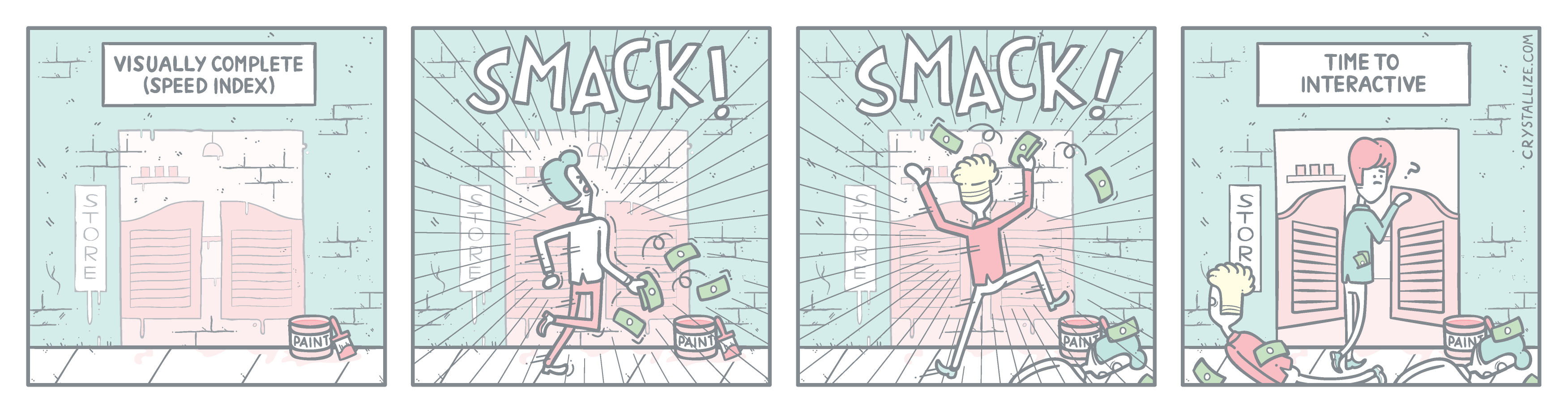

Total Blocking Time (TBT)

While talking about optimizing INP, we have to mention Total Blocking Time (TBT). TBT is a lab metric (part of Lighthouse’s report) that measures how long the page was blocked by scripts during its load phase. A task that occupies the main thread for more than 50 ms counts as a long task, and TBT is the sum of the time each long task runs beyond that 50 ms mark.

TBT is NOT one of the Core Web Vitals, but it correlates so strongly with real-world responsiveness that Lighthouse gives it a hefty weight (30% of the performance score). If your TBT in a lab test is high, it’s a sign you have a lot of main-thread blockage, which likely means users could experience laggy interactions (high INP), especially during page load or intense moments.

A TBT of 0 ms means your page didn’t have any long tasks blocking the main thread; the user could interact at any time without delay. If, say, your TBT is 1000 ms, that indicates the user potentially faced a full second during load where the app would ignore input – not great if they tried to, for example, click a nav link quickly.
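The arithmetic is easy to sketch. Assuming you have a list of main-thread task durations from a lab trace (the values below are made up), only the part of each task beyond 50 ms counts as blocking time:

```javascript
const LONG_TASK_THRESHOLD_MS = 50;

// Sum the portion of every task that exceeds the 50 ms long-task threshold.
function totalBlockingTime(taskDurations) {
  return taskDurations.reduce(
    (sum, duration) => sum + Math.max(0, duration - LONG_TASK_THRESHOLD_MS),
    0
  );
}

// Hypothetical task durations (ms): 0 + 70 + 20 + 200
console.log(totalBlockingTime([30, 120, 70, 250])); // 290
```

This is also why splitting one 250 ms task into five 50 ms tasks drops its contribution from 200 ms to zero, even though the same amount of work runs.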

For user experience, high TBT often manifests as the page being unresponsive at first – e.g., the page loads and appears interactive, but taps and clicks are unresponsive for a moment because scripts are still processing. Lowering TBT (by deferring non-critical JS, splitting bundles, and using optimization techniques shared in our performance checklist) will also help your INP, as the less time the browser is tied up, the faster it can respond to user actions.

TBT measures the potential delay (in a lab scenario), and INP measures the actual delay users experience.

Other Performance Metrics

We’ve focused on the major KPIs we recommend prioritizing, but there are many other metrics out there in the performance toolbox. Each serves a purpose and can provide insight.

For example, First Contentful Paint (FCP) measures when the first text or image is painted – it’s earlier than LCP and can tell you when something, anything started to appear. Speed Index is another metric (often reported by Lighthouse) that expresses how quickly the content is visually populated, on average, during the load. There’s also Time to Interactive (TTI), which historically measured when the page became fully interactive – the list goes on.

The key thing to understand is that all these metrics are trying to quantify the user’s experience of speed in different ways. Some are more about perceived visual loading (Speed Index), some about technical milestones (DOMContentLoaded), and so on. The more you tackle optimization, the more you’ll dip into other metrics to diagnose specific issues.
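Some of these metrics also feed the Lighthouse performance score, which is a weighted average of per-metric scores. The sketch below assumes the Lighthouse 10 weights (FCP 10%, Speed Index 10%, LCP 25%, TBT 30%, CLS 25%); the per-metric scores are hypothetical inputs on a 0 to 100 scale, which Lighthouse itself derives from the raw metric values.

```javascript
// Lighthouse 10 weights (assumed here; check the scoring calculator for your version).
const LIGHTHOUSE_10_WEIGHTS = { FCP: 0.1, SI: 0.1, LCP: 0.25, TBT: 0.3, CLS: 0.25 };

// Combine per-metric scores (0-100) into the overall performance score.
function performanceScore(metricScores) {
  let total = 0;
  for (const [metric, weight] of Object.entries(LIGHTHOUSE_10_WEIGHTS)) {
    total += weight * metricScores[metric];
  }
  return Math.round(total);
}

// Hypothetical page: stable layout, but heavy JavaScript hurts TBT.
console.log(performanceScore({ FCP: 90, SI: 80, LCP: 60, TBT: 40, CLS: 100 })); // 69

// Same page after the TBT score improves from 40 to 90.
console.log(performanceScore({ FCP: 90, SI: 80, LCP: 60, TBT: 90, CLS: 100 })); // 84
```

A quick calculation like this helps decide where to spend effort: with these weights, 50 points gained on TBT move the overall score by 15.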

Frontend performance doesn’t exist in a vacuum. Your backend performance matters a lot, too. Slow server responses, as we discussed with TTFB, will slow down everything on the front end. Similarly, if you have a headless architecture (say, a separate frontend calling an eCommerce API), the speed of that API directly affects your frontend metrics.

Backend and frontend are two sides of the same coin for the user’s experience. While you chase frontend optimizations, keep an eye on holistic performance. Profile your APIs, watch your database latency, and use monitoring tools for server-side performance as well. A truly fast user experience comes from optimizing end-to-end. If the backend is fast and the frontend is efficient, you win. If one of them lags, the user will notice.

On that note, if you’re in e-commerce, ensure your backend can handle your traffic – a lightning-fast React app means nothing if the backend falls over under load or adds 3 seconds of processing to each request. We’ve seen clients double their frontend speed but still see poor outcomes because, say, their checkout API was slow.

How to Measure Frontend Performance?

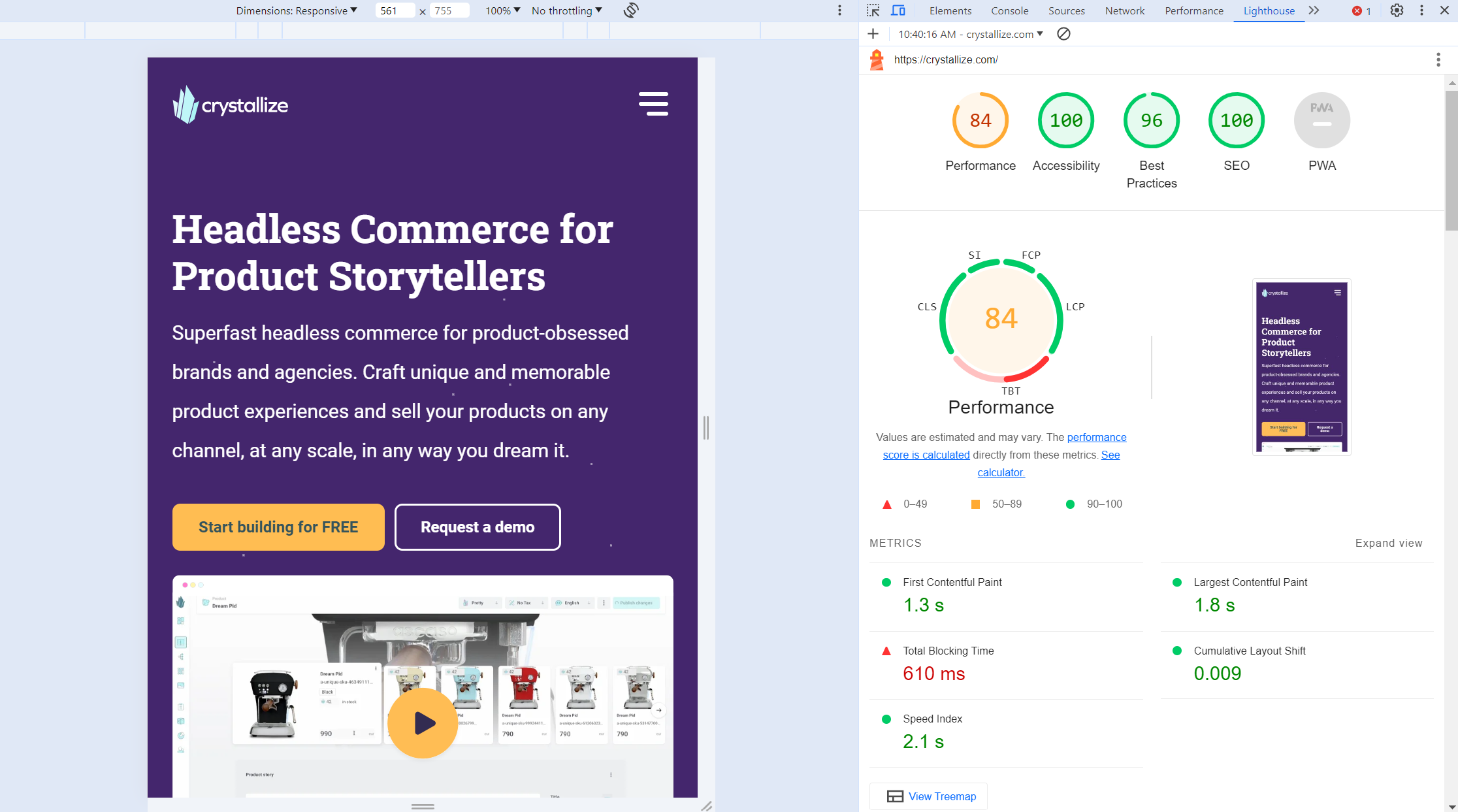

Measuring frontend performance involves collecting both lab data (simulated tests) and field data (real user metrics). Lab tests are run in controlled conditions to gauge performance after code changes, whereas field data comes from actual users in production. A number of tools can help you measure frontend performance.

For quick checks, Google’s PageSpeed Insights is a convenient web-based tool (powered by Lighthouse) that analyzes a given URL and provides performance scores with suggestions. Developers can also utilize Chrome DevTools (Performance or Lighthouse tab) to run audits on local builds – this yields detailed metrics (with Core Web Vitals highlighted) and flame charts for analysis.

You can also use Lighthouse Metrics to run Lighthouse from multiple locations to get valuable insights for your site across the world.

Google Search Console is a free tool that provides essential insights into your website's SEO performance. It also aggregates real-user Core Web Vitals (CWV) data from Chrome users via the CrUX report, which directly influences search rankings through the page experience ranking factor.

By adding your website to GSC, you can track keyword rankings, identify associated URLs, and diagnose technical SEO issues like CWV and crawlability. Search Console shows how CrUX data influences the page experience ranking factor by URL and URL group, meaning it considers URL-level CWV data when deciding where to rank you in SERPs.

For deep dives, WebPageTest offers advanced test runs from various global locations and returns rich details like waterfalls and filmstrips to pinpoint bottlenecks. Similarly, GTmetrix can analyze your site and provide a waterfall chart, speed visualization, and actionable recommendations.

On the real-user monitoring side, you can integrate the Web Vitals JavaScript library to measure metrics like LCP, CLS, and INP in your users’ browsers and send that data to an analytics platform (e.g., Google Analytics). It’s easy to use and integrates well with whatever frontend solution you might be running:

import {onCLS, onINP, onLCP} from 'web-vitals';

// Each callback receives a metric object (name, value, rating, ...) when the metric is ready to report.

onCLS(console.log);

onINP(console.log);

onLCP(console.log);

By combining lab and field measurements, you’ll gain a comprehensive view: lab tools to test and optimize during development, and field data to validate real-world performance and catch regressions over time.
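Logging to the console is fine for a first look, but in production you would ship each metric to your own collection point. Here is a minimal sketch of that wiring. The metric fields used (`name`, `value`, `rating`, `id`) are the ones the web-vitals library passes to its callbacks in current releases; the `/analytics` endpoint, the helper names, and the sample metric are assumptions for illustration.

```javascript
// Build the JSON body for one metric reported by the web-vitals library.
function serializeMetric(metric, pagePath) {
  return JSON.stringify({
    name: metric.name,
    value: metric.value,
    rating: metric.rating,
    id: metric.id,
    page: pagePath,
  });
}

// The transport is injected so the reporter can be exercised outside a browser.
function createReporter(transport, pagePath) {
  return (metric) => transport(serializeMetric(metric, pagePath));
}

// Demo with an in-memory transport and a hypothetical metric object.
const sentBodies = [];
const demoReport = createReporter((body) => sentBodies.push(body), '/checkout');
demoReport({ name: 'LCP', value: 2310.5, rating: 'good', id: 'v4-123' });
console.log(sentBodies[0]);

// In a browser, with '/analytics' as an assumed endpoint:
//   const report = createReporter(
//     (body) => navigator.sendBeacon('/analytics', body),
//     location.pathname
//   );
//   onLCP(report); onINP(report); onCLS(report);
```

Tagging each payload with the page path is a design choice: it lets you compute the 75th percentile per page or per template later, instead of one number for the whole site.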

What About Frontend Performance Monitoring?

One-off tests are not enough; you also need continuous frontend performance monitoring to ensure your live application remains fast and healthy for users. As modern web apps grow in complexity, runtime performance issues or downtimes can severely impact user experience and business metrics.

Frontend performance monitoring is the practice of tracking your application’s performance metrics in real time (or at frequent intervals) once it’s deployed, so you can catch degradations early and ensure a seamless user experience. This typically involves Real User Monitoring (RUM) – collecting data from actual user sessions – as well as synthetic monitoring (scheduled test transactions). By monitoring continuously, you’ll notice trends, spikes, or slowdowns that might be missed in isolated tests.

For example, a new release might introduce a slowdown only under specific user conditions (such as a particular browser, region, or device), or external factors (like a third-party service lag) could degrade your app’s responsiveness. With a monitoring setup, such issues trigger alerts or appear in dashboards, allowing your team to react quickly before users churn.
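A simple way to surface that kind of condition-specific slowdown is to compute the 75th percentile per segment instead of one global number. The sketch below assumes RUM samples that carry a dimension such as `browser`; the function names, the 200 ms budget, and the data are hypothetical.

```javascript
// Group samples by a dimension (e.g. 'browser') and compute the p75 value per group.
function p75BySegment(samples, dimension) {
  const groups = new Map();
  for (const sample of samples) {
    const key = sample[dimension];
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(sample.value);
  }
  const result = {};
  for (const [key, values] of groups) {
    values.sort((a, b) => a - b);
    result[key] = values[Math.ceil(0.75 * values.length) - 1]; // nearest-rank p75
  }
  return result;
}

// Return the segments whose p75 exceeds the budget.
function segmentsOverBudget(samples, dimension, budget) {
  return Object.entries(p75BySegment(samples, dimension))
    .filter(([, p75]) => p75 > budget)
    .map(([key]) => key);
}

// Hypothetical INP samples (ms) after a release.
const inpSamples = [
  { browser: 'chrome', value: 120 },
  { browser: 'chrome', value: 150 },
  { browser: 'chrome', value: 180 },
  { browser: 'chrome', value: 90 },
  { browser: 'safari', value: 240 },
  { browser: 'safari', value: 310 },
  { browser: 'safari', value: 190 },
  { browser: 'safari', value: 280 },
];

console.log(p75BySegment(inpSamples, 'browser')); // { chrome: 150, safari: 280 }
console.log(segmentsOverBudget(inpSamples, 'browser', 200)); // [ 'safari' ]
```

An alert built on the second function would fire for Safari only, which is exactly the kind of signal a single site-wide average would hide.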

Performance monitoring extends the measurement practice into production, giving you ongoing visibility into how your frontend performs and ensuring you maintain fast, consistent service for all users.

Frontend Performance Monitoring Tools You Can Use

There are many tools (both open-source and commercial) that can help monitor frontend performance. Google Lighthouse is an open-source auditing tool that can be run manually or in CI to track performance scores over time. While known for one-off audits, it can be scripted to monitor trends and also check other aspects like accessibility and SEO.
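When Lighthouse runs in CI, a common next step is to compare its JSON report against a performance budget and fail the build on violations. The sketch below reads `audits[...].numericValue` from a Lighthouse result object; the budget values, the function name, and the trimmed sample report are assumptions for illustration.

```javascript
// Budgets keyed by Lighthouse audit id. The limits are illustrative, not recommendations.
const PERFORMANCE_BUDGETS = {
  'largest-contentful-paint': 2500, // ms
  'total-blocking-time': 200, // ms
  'cumulative-layout-shift': 0.1, // unitless
};

// Return every audit whose measured value exceeds its budget.
function findBudgetViolations(lighthouseResult, budgets) {
  const violations = [];
  for (const [auditId, limit] of Object.entries(budgets)) {
    const audit = lighthouseResult.audits[auditId];
    if (audit && audit.numericValue > limit) {
      violations.push({ auditId, actual: audit.numericValue, limit });
    }
  }
  return violations;
}

// Trimmed, hypothetical report; a real one contains many more audits and fields.
const sampleReport = {
  audits: {
    'largest-contentful-paint': { numericValue: 3120 },
    'total-blocking-time': { numericValue: 140 },
    'cumulative-layout-shift': { numericValue: 0.02 },
  },
};

const budgetViolations = findBudgetViolations(sampleReport, PERFORMANCE_BUDGETS);
console.log(budgetViolations);
// In CI, a non-empty list would typically fail the job, e.g. by setting process.exitCode = 1.
```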

Sentry, another favorite of ours, is an open-source error tracking tool that also provides frontend performance monitoring features. Sentry’s performance tracing captures transactions (page loads, route transitions) and measures their durations. It offers a “Performance” view with transaction summaries, allowing you to drill into slow spans and view metrics such as Apdex scores for user satisfaction. This makes it a robust choice for engineering teams to catch frontend slowness and troubleshoot root causes.

We should also mention Pingdom and Sematext. Pingdom is a commercial service known for uptime and user experience monitoring. It provides real user monitoring (RUM) to gather page load times from your actual visitors, along with customizable alerts and detailed reports. You can also monitor specific transactions or check availability (with features like SSL monitoring) to ensure your site stays fast and reliable.

And Sematext is a monitoring platform (commercial) that includes front-end performance monitoring in its suite. It supports real-time alerts and tracking for major frontend frameworks, and even offers unified logs and metrics in one place. This means you can correlate front-end performance data with logs or back-end metrics for a full-stack view.

Finally, LogRocket. While primarily a user session replay and product analytics tool, LogRocket helps by recording users’ sessions (with console logs, network requests, and DOM state). It’s not a pure performance metric tracker, but by replaying sessions, you can visually see where users experienced slowness or UI jank.

Other notable mentions include tools such as New Relic, Datadog, and Dynatrace, which offer browser monitoring alongside backend monitoring, as well as open-source solutions like Grafana with Prometheus for custom metric tracking.

The key is to choose a tool that fits your stack and provides visibility into the metrics that matter (e.g., load times, Web Vitals, error rates) with minimal overhead. Whichever tools you use, set up dashboards and alerts for your frontend performance just as you would for server uptime – this proactive stance ensures you catch issues early.

Troubleshooting a Performance Issue

Even with monitoring in place, you will occasionally encounter performance problems – maybe a page is loading too slowly or a user interaction is laggy. Troubleshooting these issues systematically will lead you to the root cause faster. Here’s an expanded step-by-step approach:

1. Identify and define the issue. Start by observing your performance metrics and user reports to pinpoint what’s wrong. Which page or action is slow, and how slow is it compared to normal? Determine if the regression is global or isolated (specific browser, device, region, or a particular version release). Also, check if the issue is persistent or a one-time spike. By defining the scope (when, where, and for whom it happens), you’ll get clues on whether it’s a front-end problem (e.g., heavy script execution on the client) or something involving the back-end (e.g., slow API responses). Comparing current metrics to historical baselines is useful – if only the latest deployment exhibits the slowdown, it points to a recent code change or deployment issue.

2. Check the usual suspects. A large portion of front-end performance issues comes from a few common culprits: unoptimized third-party scripts, oversized images/media, heavy JavaScript execution, or blocking CSS files. Inspect these first. For instance, check if an analytics script or ad tag is taking a long time to load (network waterfalls can help here), or if an image was accidentally uploaded in full resolution, resulting in a huge file size. Use your browser’s developer tools Performance profile or Network tab to see resource timings. If the issue is CPU-bound (high Total Blocking Time or long tasks in the timeline), examine your JavaScript: a misbehaving loop or inefficient rendering may be freezing the main thread. If it’s visual (layout shifting or slow rendering), examine your CSS for large layout recalculations or style invalidations. Essentially, audit all the front-end assets and code paths that run during the slow transaction – many tools will flag large files or long tasks that degrade performance. Addressing these “low-hanging fruit” optimizations (compressing images, deferring non-critical scripts, etc.) often resolves the issue.

3. Verify caching and CDN usage. If your investigation reveals that every page load fetches all resources anew, or users repeatedly download large files, then caching may be misconfigured. Ensure that you have a proper browser caching strategy in place. Check response headers for static resources (images, scripts, styles) to see if they have far-future expiry or ETags – if not, set them up so browsers can reuse cached content on repeat visits. A healthy CDN setup means users get assets from a nearby edge server, reducing round-trip time. A misconfigured CDN or an asset not being served via CDN can slow down load times for users far from your origin server.

4. Examine server-side. Sometimes what appears as a front-end slowness is actually caused by the back-end or network. Particularly if you see high Time to First Byte (TTFB) or long gaps before content starts arriving, investigate your server performance. This may involve looking at API response times, database queries, or authentication calls that the front-end depends on. If an endpoint that the frontend calls is slow (say, the product search API takes 3 seconds), no amount of front-end optimization will fix that — you’d need to optimize the server code, database indexing, or caching on that API. Having a backend that’s built for that, like Crystallize, certainly helps.

5. Examine network factors. Consider network conditions: are users on slow connections or behind proxies? If a problem is region-specific, a network routing issue or the server location may be at play. Use monitoring tools that break down server vs. client time, or run tests from different locations (with tools like WebPageTest) to isolate whether the slowness comes from the network or from back-end latency.

6. Implement fixes and validate the improvement. After hypothesizing the cause and applying a fix (such as compressing an image, removing a blocking script, or optimizing a slow query), always re-measure the performance to verify that the issue is resolved. Use the same metrics and tools from step 1 to compare before vs. after. If the performance is back to acceptable levels and the monitoring charts stabilize, then you’ve successfully troubleshot the issue. If not, continue iterating – there might be multiple contributing factors to address.

By following these steps (identify, check common causes, verify caching, investigate the server, examine the network, and validate fixes), you can methodically troubleshoot frontend performance problems.
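The caching check from step 3 is easy to automate. Here is a minimal sketch that inspects the response headers of a static asset and lists what looks off. It assumes lowercase header names (as Node's HTTP client exposes them) and a 30-day minimum `max-age` for fingerprinted assets; both are assumptions to adjust for your setup, and the function names are hypothetical.

```javascript
// Extract max-age (in seconds) from a Cache-Control header value; null if absent.
function parseMaxAge(cacheControl) {
  const match = /(?:^|,)\s*max-age=(\d+)/i.exec(cacheControl || '');
  return match ? Number(match[1]) : null;
}

// List caching problems for one static asset response.
function auditCaching(headers, minMaxAgeSeconds = 60 * 60 * 24 * 30) {
  const cacheControl = headers['cache-control'] || '';
  const maxAge = parseMaxAge(cacheControl);
  const issues = [];

  if (/no-store/i.test(cacheControl)) {
    issues.push('no-store prevents any caching');
  } else if (maxAge === null) {
    issues.push('no max-age set');
  } else if (maxAge < minMaxAgeSeconds) {
    issues.push('max-age shorter than target');
  }
  if (!headers.etag && !headers['last-modified']) {
    issues.push('no validator (ETag or Last-Modified)');
  }
  return issues;
}

// A well-configured asset produces no issues.
console.log(auditCaching({ 'cache-control': 'public, max-age=31536000, immutable', etag: '"abc"' })); // []

// A short-lived asset without a validator produces two.
console.log(auditCaching({ 'cache-control': 'max-age=600' }));
```

Running a check like this over the assets in a network waterfall quickly shows whether repeat visitors are re-downloading files they should already have.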

Adopt a Performance-First Mindset

Frontend performance is not just a technical nice-to-have – it’s a fundamental aspect of user experience and a key driver of business success.

By defining clear KPIs and relentlessly optimizing for them, you empower your team to deliver a kick-ass, frictionless experience that delights users and drives outcomes.

Measure, monitor, and cultivate a performance-first culture: bake performance considerations into every feature decision (ask “will this slow us down?” as much as “does this function work?”). The payoff is a website that not only scores well on Google reports, but one that real users love to use – and that’s reflected in your bottom line.

Happy users = happy business. So let’s GO make the web faster, one millisecond at a time! 🚀
