Unlocking the Future of AI-Driven Product Data

How will Model Context Protocol (MCP) revolutionize PIM integration?

Artificial Intelligence has made significant progress in understanding and generating content; however, when it comes to retrieving up-to-date data, such as structured product data from Product Information Management (PIM) systems, challenges remain. Today, AI agents often rely on brittle integrations, manual data mapping, or third-party connectors to fetch product specs, variants, media, or availability. These approaches are error-prone, hard to scale, and require ongoing maintenance.

Imagine a scenario where a conversational AI assistant needs to fetch the current inventory or detailed specifications from your PIM system. Without a standardized protocol, developers must manually define how AI queries map to database calls or API endpoints. This is not only inefficient but also limits the speed at which businesses can innovate with AI.

What Is MCP?

The Model Context Protocol (MCP) is a new open standard designed by Anthropic and published under the MIT license. It allows AI agents to interact autonomously and securely with software systems, especially structured business systems like PIMs, without the need for manual integration or API-specific glue code.

Unlike traditional API-based interactions, where a developer has to explicitly define how each endpoint is accessed and what the schema looks like, MCP enables a self-descriptive, dynamic interface between intelligent agents and data systems. This means an AI model doesn’t just call an API; it understands the capabilities, schema, context, and constraints of the system it is communicating with. Moreover, this interface may be implemented bi-directionally.

As a result, an MCP-enabled AI is not limited to lookups: it may optimize your product organization, predict orders based on your data and inform you, or even enable customers (represented by an AI agent) to place orders.
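To make the "discover first, then call" idea more concrete, here is a minimal sketch of what an MCP client might send. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are methods from the protocol; the tool name `get_product` and its `sku` argument are assumptions made up for this illustration, not part of any real PIM.

```python
import json
from itertools import count

_ids = count(1)  # simple generator for JSON-RPC request ids


def rpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the envelope MCP messages use."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Step 1: ask the server which tools it offers.
discover = rpc_request("tools/list")

# Step 2: call one of the advertised tools.
# "get_product" and "sku" are hypothetical names for a PIM-backed tool.
call = rpc_request(
    "tools/call",
    {"name": "get_product", "arguments": {"sku": "SHOE-001"}},
)

print(json.dumps(discover))
print(json.dumps(call, indent=2))
```

The point of the sketch is the order of operations: the client does not need to know the tool in advance, because the answer to the first request tells it what the second request may look like.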

How Does It Work?

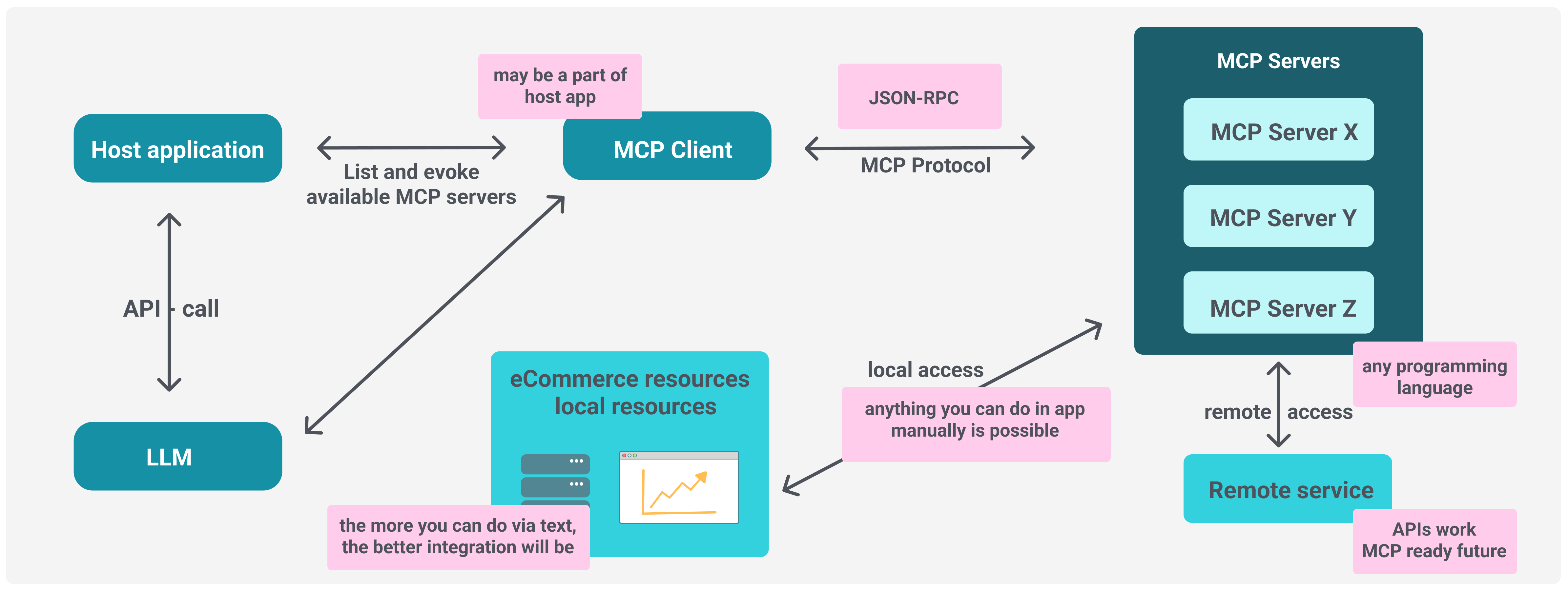

Importantly, you remain in control of both your data and the access options available to others. The core components of the protocol are the MCP client and the MCP server. Whoever controls the MCP server selects the data to share and defines the access rules.

In the graphic above, you can see an exemplary abstract view of the components involved in MCP communication. A host application communicates with a large language model (LLM) and retrieves additional data via an MCP client from one or more MCP servers. Each MCP server may in turn be connected to local data or to remote data from other services, which it exposes subject to its business logic and access restrictions.

Note that, depending on the LLM, it may directly use the MCP Client to retrieve data, or, similar to existing Knowledge-Base approaches, the application may retrieve data via MCP and provide it in the prompt context to the LLM.
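The second variant, where the application fetches data and hands it to the model as prompt context, can be sketched roughly as follows. The function `fetch_product_via_mcp` is a stand-in that returns canned data instead of talking to a real MCP server, and the product fields are invented for the example.

```python
import json


def fetch_product_via_mcp(sku):
    """Stand-in for an MCP client call; returns canned data, no network."""
    catalog = {
        "SHOE-001": {"name": "Trail Runner", "stock": 12, "price_eur": 89.0},
    }
    return catalog.get(sku)


def build_prompt(question, sku):
    """Put freshly retrieved product data into the prompt context."""
    product = fetch_product_via_mcp(sku)
    if product is None:
        context = "No product data found."
    else:
        context = json.dumps(product, sort_keys=True)
    return f"Product data: {context}\n\nQuestion: {question}"


prompt = build_prompt("Is this shoe in stock?", "SHOE-001")
print(prompt)
```

In this pattern the model never issues a tool call itself, so it also works with models that lack tool-calling support; the trade-off is that the application has to decide up front which data is relevant.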

Nowadays, MCP support is available as a standard feature in almost all major AI assistants, such as ChatGPT and Claude. Some models, however, like Llama, may not speak MCP yet. Check whether your model supports function calling (also called tool calling); if it does not, it cannot invoke MCP tools itself.

Core Components of MCP

MCP defines a set of protocol-level abstractions and message formats that create a shared understanding between the offering service and the Large Language Model (LLM, aka AI-model). These include:

- Capability Declaration. Services expose their available operations (e.g., "list products", "get price", "update stock") as machine-readable descriptions, similar to OpenAPI but optimized for AI agent parsing.

- Schema Discovery. An MCP client (such as an LLM) can query the data structure of a system: for example, which fields define a "product", which value types are allowed, and which constraints, enums, or relations apply. This allows zero-shot schema comprehension.

- Intent Resolution Layer. MCP provides a semantic layer that maps natural language intents to system operations. This enables AI systems to convert a user question such as “Show me the cheapest vegan shoes” into a precise filter query without manual training.

- Secure Communication Contract. MCP includes mechanisms for authentication, authorization, and scope restriction, allowing enterprise-grade security and compliance. It supports token-based permissions and operation-level access control.

- Contextual Session Memory. MCP sessions can retain conversational or operational context. This enables multi-turn queries or dependent operations—e.g., “Now sort those shoes by newest arrivals.”
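The components above can be illustrated with a small, self-contained sketch. It assumes a hypothetical `list_products` tool whose declaration carries a name, a description, and a JSON Schema for its arguments (the shape MCP tool listings use). The catalog, the tiny validator, and the `Session` class are invented for illustration; in practice the language model would produce the structured arguments from the user's sentence, and a real server would use a full JSON Schema validator.

```python
# Hypothetical tool declaration: name, description, JSON Schema for arguments.
LIST_PRODUCTS_TOOL = {
    "name": "list_products",
    "description": "List products, optionally filtered by tag, then sorted.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "tag": {"type": "string"},
            "sort_by": {"type": "string", "enum": ["price", "created"]},
            "descending": {"type": "boolean"},
        },
        "required": ["sort_by"],
    },
}

# Invented sample data standing in for a PIM catalog.
CATALOG = [
    {"sku": "A1", "name": "Canvas Sneaker", "tags": ["vegan"], "price": 59.0, "created": "2024-07-01"},
    {"sku": "B2", "name": "Leather Boot", "tags": [], "price": 129.0, "created": "2024-05-10"},
    {"sku": "C3", "name": "Cork Sandal", "tags": ["vegan"], "price": 39.0, "created": "2024-06-20"},
]


def validate(arguments, schema):
    """Tiny check covering only required keys and enums, not full JSON Schema."""
    for key in schema.get("required", []):
        if key not in arguments:
            raise ValueError(f"missing argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unknown argument: {key}")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"invalid value for {key}: {value}")


class Session:
    """Keeps the last result set so a follow-up can refer to 'those' products."""

    def __init__(self):
        self.last_skus = None

    def list_products(self, arguments, reuse_last=False):
        validate(arguments, LIST_PRODUCTS_TOOL["inputSchema"])
        items = CATALOG
        if reuse_last and self.last_skus is not None:
            items = [p for p in items if p["sku"] in self.last_skus]
        tag = arguments.get("tag")
        if tag is not None:
            items = [p for p in items if tag in p["tags"]]
        items = sorted(
            items,
            key=lambda p: p[arguments["sort_by"]],
            reverse=arguments.get("descending", False),
        )
        self.last_skus = {p["sku"] for p in items}
        return items


session = Session()
# "Show me the cheapest vegan shoes" expressed as structured arguments.
cheapest_vegan = session.list_products({"tag": "vegan", "sort_by": "price"})
# "Now sort those shoes by newest arrivals" reuses the previous result set.
newest_first = session.list_products(
    {"sort_by": "created", "descending": True}, reuse_last=True
)
print([p["name"] for p in cheapest_vegan])
print([p["name"] for p in newest_first])
```

Because the allowed values for `sort_by` are part of the declaration, a request with an unsupported sort key is rejected before it reaches the catalog.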

Design Philosophy

By formalizing how machines expose and consume operations, MCP eliminates much of the fragility of current AI–system integrations. It’s less about APIs and more about conversations between intelligent systems.

Key design concepts are:

- AI-Native. Built with the assumption that the primary consumer is an AI agent, not a human or a frontend application.

- Composable. Works seamlessly across various modular systems, including headless PIM, CMS, DAM, and eCommerce platforms.

- Interoperable. Transport-agnostic: it can be used over HTTP(S), WebSocket, or embedded in GraphQL wrappers.

- Secure by design

Data security becomes even more important when an AI is allowed to access data and trigger actions in real time. MCP therefore takes a security-first approach. Its architecture isolates context and enforces strict boundaries between the AI model and external resources, so that user data remains private and under user control.

Communication is secured with robust encryption and authentication measures.

In the standard case, the LLM server is controlled by an “application provider” that manages access permissions and tooling. A special case of MCP usage is a locally installed LLM client (a fat client) that accesses and controls the end user’s machine. An example would be an MCP-enabled LLM that supports you while coding in Visual Studio, reading and modifying source code during the AI conversation. While the user experience might be similar to current Copilot approaches, the implementation is better standardized and makes integrations easier for tool providers.

By design, an MCP server is limited to the specific tools and data integrations it is allowed to use or access. In the coding-support case, the protocol calls for explicit consent from the end user and fine-grained access controls to prevent unauthorized data access. This helps protect against data leakage, cross-context contamination, and prompt injection attacks. These security features, along with compliance-oriented practices (like audit logging and alignment with standards), make MCP a secure framework for context-sharing between models and clients in enterprise environments.
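One way to picture operation-level access control, explicit consent, and audit logging working together is the sketch below. It is not taken from the MCP specification: the scope names, the tool names, and the `Grant` and `AuditLog` classes are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical mapping of tool name to the scope it requires.
REQUIRED_SCOPE = {
    "get_product": "catalog:read",
    "update_stock": "inventory:write",
    "place_order": "orders:write",
}
# Hypothetical set of tools that additionally need explicit user consent.
NEEDS_CONSENT = {"place_order"}


@dataclass
class Grant:
    scopes: frozenset
    consented_tools: set = field(default_factory=set)


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)


def authorize(tool, grant, log):
    """Allow a call only if scope and consent rules pass; log every decision."""
    scope = REQUIRED_SCOPE.get(tool)
    if scope is None:
        allowed, reason = False, "unknown tool"
    elif scope not in grant.scopes:
        allowed, reason = False, f"missing scope {scope}"
    elif tool in NEEDS_CONSENT and tool not in grant.consented_tools:
        allowed, reason = False, "user consent required"
    else:
        allowed, reason = True, "ok"
    log.entries.append((tool, allowed, reason))
    return allowed


log = AuditLog()
grant = Grant(scopes=frozenset({"catalog:read", "orders:write"}))
read_ok = authorize("get_product", grant, log)       # scope present
write_ok = authorize("update_stock", grant, log)     # scope missing
order_before = authorize("place_order", grant, log)  # no consent yet
grant.consented_tools.add("place_order")             # user confirms the action
order_after = authorize("place_order", grant, log)
print(read_ok, write_ok, order_before, order_after)
```

Denied calls are logged as well as allowed ones, which is what makes the log useful for compliance reviews.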

Bård Farstad, CEO of Crystallize

Imagination: What May Be the Use Cases for MCP in Crystallize

The quote above provides a great segue to discuss potential use cases with Crystallize; let’s explore some of them.

- Better Product Content. With MCP, more context can be provided to LLM interactions, so that the quality of product content generation and translation can be improved. More context might also help AI validate human- or AI-generated content more reliably.

- Search and Navigation. With MCP, semantic search queries can be paired with a language model that dynamically extracts context-sensitive filters from your site or software. This helps customers find exactly the products that match their current needs quickly and efficiently.

- Dynamic Product Descriptions and Recommendations. Thanks to MCP, your shop system can send product information, pricing, availability, and media assets to an AI model in real time. The AI can then automatically generate relevant product descriptions, cross-selling and up-selling suggestions, or answer customer questions about your assortment and availability.

- Headless Shopping. After finding the right product via MCP, the actual purchase order can be placed directly by an AI agent. Potentially, it could be fully autonomous without any human review. This would enable the next step for a headless PIM system to provide a fully headless customer shopping experience – Zero UI.

- Optimized Marketing Copy. When you know the customer target groups you want to address, product content and the surrounding commercial content can be tailored to each group. You can achieve this either with pre-generated content or by using MCP dynamically to adapt to the current viewer of a commercial or product-detail page in real time, with the most up-to-date generation context.

Outlook: MCP with Crystallize

Crystallize, as a headless PIM and commerce platform, is well-positioned to embrace MCP. Its rich GraphQL API provides flexible data modeling and real-time content delivery options, which makes it a natural match for AI-first architectures.

MCP could be layered on top of Crystallize’s existing APIs, allowing AI agents to:

- Discover product shapes and components dynamically

- Access contextual pricing and subscription models

- Seamlessly retrieve marketing content and assets by natural language

- Interact with inventory and fulfillment logic in a secure, abstracted way, so that AI agents can place orders on behalf of humans

By integrating MCP, Crystallize can enable AI systems to self-serve product data, reducing development complexity and accelerating time-to-market for AI-powered features—from chatbots to personalization engines to shopping agents.
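As a rough sketch of what "layering MCP on top of existing APIs" could look like on the server side, the following handler answers `tools/list` and `tools/call` requests. It is an assumption-laden illustration, not Crystallize's implementation: the `PRODUCTS` dictionary stands in for a real PIM backend (where a real server would call the platform's API), and `get_product` is a hypothetical tool name.

```python
import json

# In-memory stand-in for a PIM backend; a real server would query the PIM here.
PRODUCTS = {"SHOE-001": {"name": "Trail Runner", "stock": 12}}

TOOLS = [
    {
        "name": "get_product",  # hypothetical tool name
        "description": "Look up one product by SKU.",
        "inputSchema": {
            "type": "object",
            "properties": {"sku": {"type": "string"}},
            "required": ["sku"],
        },
    }
]


def get_product(arguments):
    """Return the product as text content, or an error result if unknown."""
    product = PRODUCTS.get(arguments.get("sku"))
    if product is None:
        return {"content": [{"type": "text", "text": "product not found"}], "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(product)}], "isError": False}


HANDLERS = {"get_product": get_product}


def handle(request):
    """Answer one JSON-RPC 2.0 request with a result or an error object."""

    def reply(**body):
        return {"jsonrpc": "2.0", "id": request.get("id"), **body}

    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        return reply(result={"tools": TOOLS})
    if method == "tools/call":
        handler = HANDLERS.get(params.get("name"))
        if handler is None:
            return reply(error={"code": -32602, "message": "unknown tool"})
        return reply(result=handler(params.get("arguments", {})))
    return reply(error={"code": -32601, "message": "method not found"})


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
answer = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_product", "arguments": {"sku": "SHOE-001"}},
})
print(listing["result"]["tools"][0]["name"])
print(answer["result"]["content"][0]["text"])
```

Keeping the backend call behind a small handler function means the AI-facing contract stays stable even if the underlying API or data model changes.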

Conclusion

You might ask: Okay, but why are you telling us this?

Because MCP lets you boost revenue and improve customer experience by leveraging AI to sell the right products at the right time. AI can also facilitate direct customer engagement, fostering business growth.

MCP is applicable to small businesses as well as to large enterprise retailers that handle numerous orders per day or even per hour. It enables every shop to provide real-time support and suggestions while customers browse. Orders that do not fit together (e.g., tools for home and gardening) can be corrected with the help of AI. And customers who have not thought of all the additional articles needed for a specific use case will get suggestions on what to add to make the purchase complete.

MCP is not a topic for the future; it is already here.

Those who integrate it early stand to gain a significant competitive advantage: better product experiences, smarter suggestions, and automated orders. Crystallize is prepared. Are you?