React-based Static Site Generators in 2025: Performance and Scalability

React-powered static site generators (SSGs) combine the best of static publishing with dynamic capabilities. As we head into 2025, several frameworks stand out for their features, performance, and developer experience.

Recent State of JS 2024 survey data shows that developers are increasingly performance-conscious and open to new tools in and out of the React ecosystem. Next.js still tops usage charts, but its interest and satisfaction scores have cooled slightly as it “follows a similar trajectory” to React (still widely used, but no longer the shiny new thing).

There is no one-size-fits-all solution but a spectrum of tools optimized for different priorities. A content-heavy blog maintained by a small team might thrive with a simple static-oriented tool like Astro or even Gatsby (remember that one!?). At the same time, a large enterprise dashboard would favor Next.js for its flexibility.

Unlike previous iterations of this article, we’re casting a wider net today, covering a bit more than the title suggests. There are many reasons for this, but mainly, we want you to consider how “React-dependent” you want your stack to be while weighing solutions.

If you love React and want to stick with it everywhere, Next.js, Gatsby, or Docusaurus let you stay in that ecosystem. If you mainly want to deliver content fast and don’t mind a more framework-agnostic approach, Astro or Remix… sorry, we mean React Router v7 is likely your friend. And if you’re feeling adventurous about the future of web frameworks, you might experiment with Waku, TanStack, or Qwik’s new paradigm to get that edge in performance. The good news is that the React ecosystem is broad – you can mix and match (for example, embed React components in Astro or use Qwik for parts of an Astro site).

All of these frameworks are converging toward better performance and developer experience, so one can feel confident picking the one that fits their workflow.

Image source: State of JS 2024 survey.

Hybrid frameworks and React Server Components

In 2025, the industry is converging on hybrid rendering as a best practice. No single approach to web rendering (pure SSR or pure SSG) fits all needs, so frameworks offer flexibility. Next.js has long supported SSR and SSG and now even has Partial Prerendering; Astro supports SSG and SSR on a per-route basis and focuses on partial hydration (islands architecture); React Router v7 (formerly Remix) now offers both SSR and SSG; TanStack Start is built around SSR, supports streaming SSR, and can be configured for SSG.

This hybrid trend means React SSGs are no longer strictly “static site only”—they let you mix and match. React Server Components (RSCs) are a major paradigm shift influencing all React frameworks. RSCs allow one to render components on the server at build or request time and stream HTML to the client without needing hydration.

The next generation of React SSGs will use RSC to pre-render content and only hydrate very interactive pieces on the client. This may narrow the gap between something like Next.js and Astro. When Next.js renders mainly on the server with RSC, the result (in terms of client load) is similar to Astro’s islands.

Additionally, edge computing is now part of the conversation: hosting providers (Vercel, Cloudflare, Netlify or indeed options like Deno with Deno Fresh) enable SSR at the edge nodes, which means global low-latency responses. This has made SSR more appealing since it can be done geographically close to users to mimic the performance of static files.

Resumability and partial hydration (new paradigms)

Astro pioneered partial hydration for React sites – its island architecture lets you hydrate only specific components on the client and leave the rest as static HTML.

On the other hand, Qwik’s resumability takes things a step further: it avoids hydration altogether. The idea is to serialize the application state into the HTML and resume running it on the client without reloading any of the code already used on the server.
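To make the idea concrete, here is a minimal conceptual sketch of resumability in plain TypeScript. It is not Qwik's actual API or serialization format; the names createInitialState, renderOnServer, and resumeOnClient are hypothetical and exist only for illustration. The point is that the client reads the state the server embedded in the HTML instead of re-running the setup code.

```typescript
// Conceptual sketch only; this is not Qwik's actual API or serialization format.
type CounterState = { count: number };

let initRuns = 0; // counts how often the "expensive" setup code runs

// Hypothetical setup step that a hydrating framework would repeat on the client.
function createInitialState(): CounterState {
  initRuns += 1;
  return { count: 41 };
}

// Server: render HTML and embed the state as JSON so the client can pick it up.
function renderOnServer(): string {
  const state = createInitialState();
  return (
    `<button>Count: ${state.count}</button>` +
    `<script type="application/json" id="state">${JSON.stringify(state)}</script>`
  );
}

// Client: read the embedded state back instead of calling createInitialState() again.
function resumeOnClient(html: string): CounterState {
  const match = html.match(
    /<script type="application\/json" id="state">(.*?)<\/script>/
  );
  if (!match) throw new Error("no serialized state found");
  return JSON.parse(match[1]) as CounterState;
}

const html = renderOnServer();
const resumed = resumeOnClient(html);
resumed.count += 1; // a user interaction continues from the server's state
```

In this toy version the setup function runs exactly once, on the "server" side. A hydration-based approach would typically run the equivalent component code a second time in the browser.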

Both trends could redefine how we think of hydration altogether.

❓Static vs. Dynamic Pages / Websites

For the sake of clarity, let’s define these. Static pages are served from a server or CDN, but they are not dynamically rendered on a server, i.e., you always get the same pre-built (HTML, CSS, and JS) page. You change page content with the JavaScript code executed in the browser (not the server).

On the other hand, dynamic pages generate content/pages with the help of a server or a serverless function for each page request. That means that each time you refresh a page, you’ll be served with a newly generated page that may differ from the previous one.
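The difference can be sketched with a toy model in TypeScript. Everything here (renderPage, createStaticHandler, createDynamicHandler, and the fake clock) is a hypothetical stand-in rather than any framework's API: the static handler renders once and returns the same output for every request, while the dynamic handler renders again on each request.

```typescript
// Toy model: the same template rendered at build time (static) or per request (dynamic).
type Page = { html: string };

// Hypothetical template function; `renderedAt` stands in for any request-time data.
function renderPage(title: string, renderedAt: number): Page {
  return { html: `<h1>${title}</h1><p>rendered at ${renderedAt}</p>` };
}

// Static: render once "at build time" and serve the same output for every request.
function createStaticHandler(title: string, buildTime: number): () => Page {
  const prebuilt = renderPage(title, buildTime);
  return () => prebuilt;
}

// Dynamic: render again on every request, so the output may differ between requests.
function createDynamicHandler(title: string, clock: () => number): () => Page {
  return () => renderPage(title, clock());
}

// A fake clock that advances by one on every read, to keep the example deterministic.
let tick = 0;
const clock = () => ++tick;

const staticHandler = createStaticHandler("Hello", 0);
const dynamicHandler = createDynamicHandler("Hello", clock);

const staticFirst = staticHandler().html;
const staticSecond = staticHandler().html;
const dynamicFirst = dynamicHandler().html;
const dynamicSecond = dynamicHandler().html;
```

Two requests to the static handler return identical HTML, while two requests to the dynamic handler differ because each one is rendered with fresh request-time data.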

We have already covered what a static site is in depth. Go, jog your memory.

Top React Static Site Generators for 2025

This post provides an in-depth look at six SSGs expected to shine: Next.js, Astro, Remix, TanStack Start, Qwik, and Docusaurus. We’ll compare their key features, pros and cons, real-world adoption (using data from 2024 surveys and GitHub), performance metrics, and future outlook. (Each tool is complex enough for its own article; here, we’ll offer an overview and note where deeper dives might be warranted.)

We're not here to tell you which framework is right for you. If you’re a fan of Qwik (or indeed any of these mentioned or unmentioned here) and believe it’s the best tool for solving your problem, then go for it!

We hope the information we share here gives you the confidence to make a more informed decision, no matter which direction you're headed.

Next.js: A Versatile React Framework

Next.js is a versatile React framework for building static sites and dynamic web applications. Vercel has backed it since its release in 2016. Next.js provides a hybrid rendering model, including static site generation (SSG), server-side rendering (SSR), incremental static regeneration (ISR), Partial Prerendering (PPR) leveraging React Suspense, and traditional client-side rendering.

It offers file-system routing (drop a page file in the pages or app directory, and you get a route), built-in API routes for serverless functions, and rich performance optimizations like automatic code-splitting and image optimization. Next.js 13+ introduced the App Router with React Server Components and Server Actions (server-side form and data handlers) to streamline full-stack development. These capabilities make Next.js a full-stack React framework suitable for everything from content sites to complex interactive apps and Next.js e-commerce storefronts.
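As a rough illustration of static generation combined with ISR, here is a sketch of the Pages Router data function getStaticProps with a revalidate interval. The Post type and the fetchPosts helper are hypothetical placeholders for a CMS or database call, and the function is left unexported so the snippet runs on its own; in a real page file it would be exported. In the App Router, the comparable setting is a revalidate export on the route segment.

```typescript
// Would live in a Pages Router file such as pages/posts.tsx (hypothetical path),
// where Next.js expects it to be exported as `getStaticProps`.
type Post = { slug: string; title: string };

// Hypothetical data source standing in for a CMS or database call.
async function fetchPosts(): Promise<Post[]> {
  return [{ slug: "hello-world", title: "Hello, world" }];
}

async function getStaticProps() {
  const posts = await fetchPosts();
  return {
    props: { posts }, // handed to the page component when the page is generated
    revalidate: 60, // ISR: regenerate in the background at most once every 60 seconds
  };
}
```

Leaving out revalidate gives plain static generation: the page is built once and only changes on the next build.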

Pros: Next.js’s biggest strength is its flexibility and maturity:

- Hybrid Rendering: It supports SSR for dynamic content and SSG for static pages in one project, even per-page. This unmatched flexibility lets developers choose the best rendering strategy per use case.

- Ecosystem & Tooling: Next.js has a vast community and ecosystem. It’s widely adopted, with many starters, plug-ins, and examples. Its community size translates to abundant learning resources and third-party libraries.

- Performance Focus: Next.js is continually improving its performance. Since version 13, it has offered a Rust-based bundler (Turbopack) and React Server Components for less client JS. Next.js 15 leverages React 19 features and ships Turbopack as stable for the dev server.

- Full-Stack Features: Out-of-the-box API routes and middleware allow building backend functionality alongside the frontend. This eliminates the need for a separate server, appealing to teams that want an all-in-one solution.

Cons: Despite its strengths, Next.js has some trade-offs:

- Complexity: With so many features, Next.js can feel heavy for simple static sites. Its SSR model, while powerful, can be less straightforward than a pure static setup. Some developers find Remix’s simpler SSR approach more flexible and “built-in.”

- Server Resource Usage: SSR in Next.js can be intensive. Dynamic, data-heavy pages rendered on each request may strain server resources, requiring careful optimization or beefier infrastructure.

- Static Generation Limits: Next.js generates static pages well, but build times and memory usage can become a concern at vast scales (e.g., tens of thousands of pages). The team introduced ISR to rebuild pages on demand, but massive sites might hit limits or require workaround strategies.

- Magic: Next.js has many “magic” features; the simplest example is Server Actions with forms. While this does a lot for developer experience, it has a downside: it is harder to understand what’s happening and why.

Popularity & Usability. Next.js is effectively the flagship React framework today. It’s the only React framework with high usage and high retention among developers, meaning people try and stick with it. Many high-profile companies use Next.js in production (e.g., Hulu, TikTok, Netflix, Twitch), and its GitHub repository has over 110k stars (a metric of interest on Rising Stars reports). If you know React, Next.js is relatively straightforward to get started, and the learning curve is moderate.

Performance. Next.js offers solid runtime performance, especially with the latest optimizations. SSR can deliver fast first paint for users (without waiting for client JS to render initial content), and features like automatic code splitting and image optimization improve page speed. Don't just take our word for it. Check out our new Next.js eCommerce boilerplate we built for fast and performant storefronts.

Astro.build: Framework for Content-driven Websites

Astro is a newer framework (first released in 2021) focusing on content-rich websites and a concept called Islands architecture. Astro’s approach is to render the bulk of the page as static HTML (no JS by default), and load interactive components as needed in isolated “islands”. Importantly, Astro lets you bring any UI framework to those islands – you can use React, Svelte, Vue, Solid, etc., inside an Astro project.

This makes Astro very flexible: teams can write content in Markdown/MDX and sprinkle in interactive widgets written in React or other frameworks. Astro has first-class support for Markdown content and a Content Collections API (in Astro v2-v3) for type-safe data from local files. Astro v5 (late 2024) introduced a Content Layer to easily enable type-checked content and data fetching from any source (local or external), enhancing Astro’s use for blogs, documentation, and marketing sites.

While Astro is primarily an SSG, it can also do SSR if needed. However, its sweet spot is static site generation for speed and SEO. It also has a rich integration ecosystem (for CMSs, image handling, sitemaps, etc.) and supports custom server endpoints if you need dynamic API routes. While it might not be the usual use case, don't exclude Astro for e-commerce.

Pros: Astro’s strengths have made it a rising star, especially for content websites:

- Minimal JavaScript = Great Performance: Astro renders pages to static HTML and, by default, does not send JavaScript to the browser for that content. Interactive components are hydrated only when necessary. This “zero JS by default” approach leads to extremely fast load times and excellent Core Web Vitals in production.

- Islands Architecture: Astro’s partial hydration (islands) model means different page parts can hydrate independently and in parallel. High-priority interactive components become usable faster without waiting for the whole page’s JS bundle.

- Flexible and Framework-agnostic: You’re not locked into React alone. Astro lets you use React for one widget, Svelte for another, or plain HTML/Alpine, etc., all in one website. This makes it easy to embed third-party components or gradually adopt Astro for parts of a site. The Astro component syntax (.astro files) is similar to HTML/JSX and easy to learn, and you can always drop down to raw JSX if needed.

- Developer Experience: Astro has earned praise for being beginner-friendly. You can start with mostly HTML/Markdown and no complex setup. The learning curve is gentle (one can build a site with basic web knowledge), yet Astro doesn’t exclude advanced users—it supports TypeScript, JS frameworks, and numerous integrations. Hot reloading, Vite-based tooling, and a growing community make Astro enjoyable to work with.

- SEO and Static-First Benefits: Because pages are pre-rendered, they are SEO-friendly out of the box (each page is an HTML file ready to crawl). Content sites can easily add meta tags, JSON-LD, etc., without worrying about hydration. Astro also supports features like RSS, sitemap generation, and i18n via integrations.
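The islands idea from the list above can be modeled in a few lines of TypeScript. This is a toy model, not Astro's internals: PageComponent, buildPage, and the bundle sizes are hypothetical, and only the directive names echo Astro's client:load, client:idle, and client:visible template directives. Components without a directive contribute HTML only, so their JavaScript is never shipped.

```typescript
// Toy model of an islands page; not Astro's internals.
type Hydration = "load" | "idle" | "visible";

interface PageComponent {
  name: string;
  html: string; // server-rendered markup, always shipped
  scriptKb: number; // hypothetical size of the component's client bundle
  client?: Hydration; // absent means "static HTML only, no JS"
}

function buildPage(components: PageComponent[]) {
  const html = components.map((c) => c.html).join("\n");
  // Only components that opted into hydration become islands.
  const islands = components.filter((c) => c.client !== undefined);
  const shippedKb = islands.reduce((sum, c) => sum + c.scriptKb, 0);
  return { html, islands: islands.map((c) => c.name), shippedKb };
}

const page = buildPage([
  { name: "Header", html: "<header>Blog</header>", scriptKb: 12 },
  { name: "Article", html: "<article>Long read</article>", scriptKb: 30 },
  { name: "LikeButton", html: "<button>Like</button>", scriptKb: 8, client: "visible" },
]);
```

In this example all three components appear in the HTML, but only the LikeButton bundle counts toward the JavaScript sent to the browser.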

Cons: Astro’s focus on static content means it’s not the best fit for every scenario:

- Not Ideal for Highly Dynamic Apps: Astro might feel limiting if your project involves an application with a lot of client-side state or frequent real-time updates (think a dashboard or chat app). It wasn’t designed for complex single-page app interactivity or multi-page state sharing. Astro doesn’t maintain client-side state across pages (each page is an MPA reload).

- Ecosystem Maturity: Astro is relatively young. Its ecosystem of plugins and starters, while growing quickly, is smaller than Next’s. You might find fewer off-the-shelf solutions for particular needs. While you can use React libraries within Astro islands, not all React libraries expect to run in an Astro context (minor interoperability quirks can occur).

- Build Time for Very Large Sites: Astro’s build is generally fast (often faster than Next for equivalent sites), but if you push it to extremely large content sites (tens of thousands of pages), build time can still add up linearly.

- Learning New Syntax: Astro files use a special syntax (similar to Svelte/Vue single-file component style) with a clear separation between frontmatter script and template. It’s straightforward, but it’s another thing to learn, and you need to understand the hydration directives for interactive islands. React devs will also need to adjust to the concept that not everything is a component; much of your Astro page is just static markup by design.

Popularity & Usability. Astro has rapidly gained a following. In the linked State of JS surveys, Astro has shown high satisfaction scores (many who try it love it) and rising awareness. It is already used in production by sites like Inbox by Gmail (archive), OpenAI’s cookbook, and many content websites. Easy onboarding marks its usability: one can run npm create astro@latest and have a site running in minutes. The framework’s “server-first” philosophy (as the Astro homepage says, “server-side rendering by default, zero JS by default”) resonates with developers frustrated with heavy SPAs for simple sites. Documentation and community support (Discord, etc.) are very active, making Astro quite approachable even for devs new to React or modern build tools. Astro generates a “true” static website: it does not require Node at runtime if you only use static output. In that respect, the fair comparison is with the Next.js static export.

Performance. Astro’s performance is arguably its biggest selling point. With its default behavior of shipping almost no JavaScript to the browser, Astro pages load extremely fast. Common metrics like Time to Interactive (TTI) and Largest Contentful Paint (LCP) tend to be excellent on Astro sites because the browser isn’t busy executing large JS bundles on load. Astro also benefits from using Vite under the hood for fast HMR (development is speedy) and efficient production builds.

Build times are generally very good. At runtime, the content is just static HTML/CSS, so it’s as fast as it gets. Only the interactive parts incur any client-side cost. Those parts can use frameworks like React, so their performance is equivalent to that framework running in isolation.

Astro excels at static content sites, such as marketing pages, blogs, documentation, and portfolios—any project where most content is static or only lightly interactive. But amazingly, it works well with commerce 👉 Hands-On Experience: eCommerce Store with Astro?

React Router v7 (Remix.Run)

Remix or React Router v7? The end of 2024 brought the change, and people are wondering whether it is one, the other, or both. The most straightforward answer is that they are closely related but serve different purposes and operate at different layers in the web application stack.

Remix is a full-stack React framework that embraces web standards and server-rendered workflows. Created by the team behind React Router and first released in 2020, Remix positions itself as a server-centric React framework. It uses React Router under the hood and introduces the concept of nested routes and layouts at the framework level – allowing complex UI layouts to be represented in the URL hierarchy. Remix’s hallmark features are its Loaders and Actions, conventions for data fetching and mutations tied to routes. A Loader is a function that runs on the server before a route renders, providing data for the component, and an Action handles form submissions or other mutations on the server. This approach means most of your data-loading logic lives on the server side by default, enhancing security and avoiding exposing sensitive data in the client.
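The loader and action convention can be sketched with nothing but the web-standard Request and Response objects. This is a simplified illustration under stated assumptions: the RouteArgs type is declared locally to avoid dependencies (the real argument types ship with the framework), the in-memory notes store and the /lists/:listId route are hypothetical, and the functions are left unexported so the snippet runs on its own. In a route module they would be exported as loader and action.

```typescript
// Shape of the argument passed to loaders and actions, declared locally so the
// sketch has no dependencies (the real types ship with the framework).
type RouteArgs = { request: Request; params: Record<string, string | undefined> };

// Hypothetical in-memory store standing in for a database.
const notes = new Map<string, string[]>([["inbox", ["Buy milk"]]]);

// Runs on the server before the route renders and supplies its data.
async function loader({ params }: RouteArgs): Promise<Response> {
  const list = notes.get(params.listId ?? "") ?? [];
  return new Response(JSON.stringify({ notes: list }), {
    headers: { "Content-Type": "application/json" },
  });
}

// Handles the route's form submissions on the server.
async function action({ request, params }: RouteArgs): Promise<Response> {
  const form = await request.formData();
  const text = String(form.get("text") ?? "").trim();
  if (!text) return new Response("text is required", { status: 400 });

  const listId = params.listId ?? "";
  notes.set(listId, [...(notes.get(listId) ?? []), text]);

  // Redirect back to the list so the loader runs again with fresh data.
  return new Response(null, {
    status: 303,
    headers: { Location: `/lists/${listId}` },
  });
}
```

Because the action reads a standard form submission, a plain HTML form posting to the route would work even before any client-side JavaScript loads, which is the progressive-enhancement angle of this design.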

Remix also supports streaming SSR (delivering HTML in chunks for faster display) and progressive enhancement (it encourages the use of <form> and <button> so that even without JS, basics still work).

Out of the box, Remix projects have no extra client-side state management library; you rely on server rendering plus browser navigation for most interactions, which is a different paradigm from heavy client SPA frameworks. Thanks to its adapter architecture, Remix can also be deployed to various environments (Node, serverless, Cloudflare Workers, etc.). Notably, Remix was acquired by Shopify in 2022 and has influenced Shopify’s Hydrogen framework (Hydrogen is now built on Remix). This backing ensures ongoing development and integration with all modern edge platforms. It does not mean you can’t use Remix with other e-commerce platforms.

Pros: Remix has attracted developers who value its unique philosophy and developer experience:

- Elegant Nested Routing & Layouts: Remix makes it straightforward to build complex, multi-level layouts (e.g., a sidebar layout nested in a main layout, etc.) with its routing structure. Because each route can have its loader and partial UI, you naturally get code splitting and can reload data for just that portion of the page. This simplifies managing complex navigation and code reuse across pages. What used to be tricky in older Next.js (pre-App Router), like nested layouts, is now a first-class concept in Remix.

- Simplified Data Handling: With Loaders and Actions, data fetching and mutations follow a convention that’s easy to reason about. The framework handles fetching loader data on the server, caching it, and providing it to your components. This means no manual data-fetch code in components or extra libraries for SSR data hydration – Remix does it for you. The result is fresh data on each request (suitable for dynamic sites) and a straightforward mental model for forms and submissions (you use standard HTML forms or fetch and define an Action to process it). This leads to a streamlined developer experience with minimal configuration; you write routes and functions, and things work. Many devs appreciate the “just use the platform” approach (e.g., leveraging <form> instead of custom JS for interactions).

- Great Developer Experience & Conventions: Remix has clear conventions that reduce boilerplate. There is minimal config; most things are just code (routes as files, loader/action exports, etc.). It handles a lot for you (like preventing sensitive data from leaking to the client, handling errors through route boundaries, etc.), so you can focus on building features. Developers often cite Remix as a framework that feels nice to work with – it’s opinionated in a way that avoids bikeshedding. Also, React developers find the router API familiar because it builds on React Router. Hot reload works seamlessly for both client and server changes.

- Progressive Enhancement & User Experience: Remix’s architecture ensures speedy initial loads. Every page is server-rendered by default, so users get HTML content quickly. Then, any further navigation can happen via client-side transitions (Remix will load the next page’s data via fetch and swap in the content, avoiding a full reload – effectively an SPA navigation). This yields fast subsequent page transitions without sacrificing the initial load or SEO. Remix also supports streaming responses, meaning it can send down pieces of the HTML as they’re ready (useful for very slow data sources).

Cons: There are trade-offs and limitations to Remix’s approach:

- Smaller Ecosystem and Community: While growing, Remix has had a more niche community than Next.js. Now, as React Router v7, it still has fewer ready-made examples or starter templates, and some third-party libraries might not have Remix-specific guidance. The number of plugins and integrations is increasing (and the React Router convergence helps).

- No Native SSG (Static Export): Unlike Next export or Astro, Remix doesn’t natively output a static site from a build (since it assumes a Node or edge runtime to run loaders on demand). If you need a pure static site (no Node server at all), Remix isn’t the obvious choice. There are workarounds – for pages that don’t change, one could prerender them manually or use third-party tools – but out-of-the-box, Remix expects to run as an application, not just serve prebuilt files. This means that for purely static scenarios (like documentation sites or mostly-static marketing sites), Remix might be less convenient than a true static generator.

Popularity & Usability. Remix’s popularity saw a bump after the Shopify acquisition and the hype around its release. It’s used by notable companies: Shopify (Hydrogen), of course, and others like NASA (for a mission alert system), Docker (for parts of their web infrastructure), and your favorite AI ChatGPT as well 😀

One advantage Remix has is that its DNA is now in React Router – meaning concepts like loaders are becoming familiar even to non-Remix React devs. Usability-wise, if you follow Remix conventions, it feels coherent and straightforward.

This brings us to React Router v7, a routing library that manages client-side navigation in a React application.

Used purely as a library, React Router is primarily concerned with client-side routing and handling URLs. In that mode it doesn't handle SSR or full-page rendering itself; that comes from its framework mode (the Remix lineage described above) or from other tools that manage server-side rendering.

In its basic declarative usage, React Router also leaves data fetching to you: it’s up to the developer to combine it with other tools (like fetch, axios, or data-fetching hooks) and handle data in the components. Route-level loaders and actions are available only when you opt into its data router or framework mode.

It is primarily for client-side navigation and isn’t concerned with deployment. It is agnostic to where the application is hosted (whether it’s on a CDN, a serverless platform, or a traditional server), but it requires the app to be preloaded on the client side first.

Popularity & Usability. React Router v7 is the go-to routing library for most React applications. It is deeply integrated into the React ecosystem and is widely used across open-source and enterprise applications. On its own, React Router is ideal for building single-page applications (SPAs) where client-side routing is the primary focus.

TanStack Start: Full-stack React and Solid Framework

TanStack Start is the newest entrant on this list and represents a fresh take on React full-stack rendering. It’s built by Tanner Linsley and team (known for TanStack Query, TanStack Router, etc.), and as the name implies, it builds on the TanStack Router library to provide a higher-level framework. TanStack Start provides a lightweight server-side rendering layer, isomorphic data fetching, and advanced routing out of the box.

In essence, it provides the building blocks of Next.js/Remix-style apps but in a more unbundled, flexible form. Key features include full-document SSR (the whole HTML is rendered on the server), streaming responses (for progressive rendering), server functions (similar to API routes or server actions for running back-end logic), and isomorphic loaders (TanStack’s version of fetching data seamlessly on both server and client).

TanStack Start is built on modern tooling: it uses Vite for the client build and Nitro (from the Nuxt.js ecosystem) as a server engine. Nitro provides features like static prerendering and the ability to deploy to various targets (Node, edge, serverless) with minimal config. This means TanStack Start apps can be deployed virtually anywhere, similar to Remix’s adapters.

TanStack Start also emphasizes TypeScript support and integration with TanStack Query for managing caching and state. If you love the TanStack libraries (Router, Query, etc.), Start ties them together so you can quickly “start” building an entire app.

Pros: Although young, TanStack Start offers some enticing benefits:

- Modern, Flexible Architecture: TanStack Start is less monolithic than some frameworks. It feels more modular because it’s built from a composition of libraries (TanStack Router, Query, etc., plus Vite and Nitro). This can mean more flexibility when swapping pieces or configuring behavior. The framework aims to be unopinionated about how you fetch data – you can use the built-in loaders, TanStack Query, or both.

- Isomorphic Data Fetching and TanStack Query: The approach to data fetching is influenced by Remix (loaders) but supercharged by TanStack Query. It provides isomorphic loaders (functions that run on the server initially and then can run on the client for subsequent transitions). Additionally, it seamlessly integrates TanStack Query for caching. This means you get features like automatic data caching, cache invalidation, and background refetching out of the box if you use Query. Compared with manually managing global state or using simpler loader patterns, this can greatly improve the developer experience for apps with lots of dynamic data.

- Performance via Vite and Streaming: Using Vite as the build tool means lightning-fast HMR and efficient bundling. Developers coming from Webpack-based frameworks will find Start’s dev environment very quick. For SSR, the support for streaming means better TTFB for users (similar to Next and Remix’s latest capabilities). And since Nitro is under the hood, Start can pre-render pages to static files if desired (for truly static routes) and do edge rendering efficiently.

- TanStack Ecosystem Benefits: The TanStack libraries (Query, Router, Table, etc.) are known for their quality and performance. By using them as building blocks, you’re not reinventing wheels or waiting for framework-specific solutions – you’re leveraging well-tested independent libraries.

Cons: Given its youth, TanStack Start has some clear challenges:

- Early Stage / Maturity: As of 2025, TanStack Start is brand new and possibly still in beta. That means you might encounter rough edges or changing APIs, and early adopters need to be comfortable with that. The documentation is evolving, and community knowledge is limited since few large apps have been built with it yet. Production use would require caution until it stabilizes.

- Small Community (for now): Unlike the others on this list, Start doesn’t (yet) have a large user base. Initially, community support, examples, and third-party tutorials will be sparse. People will likely discuss it alongside TanStack Router/Query topics but expect fewer resources than Next or Remix. You might solve specific problems on your own until the community grows.

- No Big Corporate Backer (yet): It’s an open-source project led by a respected developer (Tanner Linsley), but it is not backed by Vercel, Shopify, or Meta. This isn’t necessarily a con, but the development pace could depend on a small team’s bandwidth or sponsorships. TanStack libraries have thrived as community-driven projects, so Start could, too.

- Feature Parity Still Emerging: Start’s goal is ambitious: combine features of Next.js and Remix in a lighter package. It already checks many boxes (SSR, streaming, data, etc.), but there may be features it lacks that mature frameworks have (for example, Next’s image optimization, a built-in auth solution, etc.). As an early adopter, you might need to assemble some parts yourself (which, if you like flexibility, is fine, but it’s more effort). Over time, we expect integrations or recipes to fill these gaps.

Popularity & Usability. It’s a bit early to gauge real-world popularity. However, the React community buzz around TanStack Start has been notable. If you are familiar with TanStack Router/Query, adopting Start is straightforward—it essentially layers SSR and deployment on top of those. One can scaffold a new app, define routes, and get going quickly. The fact that it’s built on familiar tools (Vite dev server, etc.) also means the local development experience is pleasant.

Performance. The performance potential of TanStack Start is very high. With SSR and streaming, low latency first renders can be achieved. Using Nitro (from Nuxt) for the server means it has sophisticated bundle splitting for server functions and can tree-shake unused code from the server build. In practice, a TanStack Start app should perform similarly to a Remix app (both use Vite and do SSR) or even better in some cases due to TanStack Query’s intelligent caching. Build times are fast thanks to Vite. Also, because Start can prerender routes to static HTML (leveraging Nitro’s prerender feature), it can serve a hybrid of fully static content and SSR for dynamic parts – optimizing the performance where possible.

Another aspect is bundle size: TanStack Router is lightweight, and you only pay for what you use. A simple TanStack Start page might send minimal JS (just React + Router and your page code). That could mean smaller bundles than an equivalent Next.js page, which includes Next’s hefty runtime. However, this is speculative until benchmarks are done. Developer experience performance (iteration speed) is excellent due to Vite’s HMR. Overall, given its modern foundation, TanStack Start will be competitive in build and runtime performance with the best of this list.

If anything, its performance will hinge on how well it can leverage Nitro to handle edge cases and how optimized TanStack Router’s hydration is. However, those are solvable problems. For now, think of Start as having the performance of a well-tuned custom React SSR setup—which is to say, very good.

TanStack Start is a newcomer that combines the strengths of existing libraries into a cohesive framework. It’s like getting a custom React toolkit (Router + SSR + Query) without assembling it all from scratch.

Qwik: A New Kind of Framework

Qwik is a bit of an outlier in this list – it’s not based on React at all, but it targets the same problem space of building interactive websites, and it’s often mentioned alongside React frameworks due to its novel approach. Qwik introduces the concept of resumability. Its primary goal is to deliver instant interactivity by avoiding the costly hydration process that React (and others) use. Qwik applications can be SSR rendered to HTML, and then instead of hydrating (re-executing component code on the client), Qwik can resume the app from where the server left off by using serialized state and listeners. Qwik can act as an SSG: you can pre-render pages at build time (SSG) or render on the fly (SSR), similar to other frameworks.

In practice, Qwik splits your application into many small chunks of JavaScript and only loads code for a component when it’s needed (e.g., when a user interacts with it). This is achieved via a specialized compiler and a framework API that uses things like $(...) to mark lazy-loadable boundaries.
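The idea of resuming from serialized state and loading handler code only on interaction can be sketched in plain TypeScript. This is a conceptual model only: the payload shape, the chunk names, and the `dispatch` helper are hypothetical, and none of it reflects Qwik's real runtime or serialization format.

```typescript
// Conceptual sketch only: not Qwik's runtime or its real serialization format.
type State = { count: number };
type Handler = (state: State) => void;

// Each loader stands in for a separately bundled chunk. A real loader would be
// an async dynamic import(); it is synchronous here to keep the sketch short.
const chunkLoaders: Record<string, () => Handler> = {
  counter_onClick: () => (state) => { state.count += 1; },
  counter_onReset: () => (state) => { state.count = 0; },
};

// What the server might embed in the page: serialized state plus a map from
// "element:event" to the chunk that handles it (all names are hypothetical).
const serverPayload = {
  state: JSON.stringify({ count: 41 }),
  listeners: {
    "button#inc:click": "counter_onClick",
    "button#reset:click": "counter_onReset",
  } as Record<string, string>,
};

// Resuming: restore state from the payload without running component code.
const state: State = JSON.parse(serverPayload.state);
const loaded = new Map<string, Handler>();

function dispatch(eventKey: string): void {
  const chunkId = serverPayload.listeners[eventKey];
  if (chunkId === undefined) return; // nothing registered for this event
  let handler = loaded.get(chunkId);
  if (!handler) {
    const load = chunkLoaders[chunkId];
    if (!load) throw new Error("Unknown chunk: " + chunkId);
    handler = load();             // first use: "download" the chunk
    loaded.set(chunkId, handler); // later uses hit the cache
  }
  handler(state);
}

dispatch("button#inc:click");
dispatch("button#inc:click");
console.log(state.count, loaded.size);
```

After two clicks on the increment button, only one of the two chunks has been loaded; the reset handler's code is never fetched unless the user actually clicks reset.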

Qwik City is the meta-framework for Qwik (analogous to Next.js for React) that provides routing, static site generation, and other app-layer features. It offers file-based routing, endpoints (serverless functions), and the option to generate a fully static site. Another notable feature is integration with Partytown, a library from the same team behind Qwik that offloads third-party scripts to web workers, which further improves performance by isolating heavy scripts.

Pros: Qwik’s advantages mostly revolve around its performance paradigm:

- Instant Interaction, No Hydration: Qwik’s resumability means a Qwik site, when loaded, doesn’t immediately execute a bunch of JS to become interactive – it’s already interactive. The event listeners were already declared in the SSR output. So, when a user clicks a button, Qwik will load the code for that button’s handler on demand and execute it. This yields a low First Input Delay (FID, the metric that Interaction to Next Paint replaced in Core Web Vitals in 2024) and a fast Time to Interactive (TTI), as there is essentially no large blocking hydration step. For users on slow networks or devices, this is a game changer – the page becomes usable much faster compared to a typical React hydration approach.

- Fine-Grained Code Splitting: Qwik automatically splits your app into many tiny chunks, and uses the browser’s ability to fetch those chunks when needed. This fine-grained lazy loading means even a large app can load on the client with minimal initial JS. It’s a step beyond what code-splitting in Webpack or Next does, because Qwik can split at the level of individual component effects or event handlers.

- SSG and SSR Flexibility: Despite being a new approach, Qwik doesn’t throw out the best practices of SSR/SSG. Qwik City can pre-render pages at build (giving you static files and all the SEO benefits), and then seamlessly allow those pages to become interactive via Qwik’s lazy loading. In a sense, Qwik offers the holy grail: the performance of a static site (since it’s pre-rendered and doesn’t force a big JS load) combined with the interactivity of an SPA when needed. And because it’s SSR by nature, personalization or per-request data can also be handled (though fully dynamic SSR might reduce some of the performance edge if not cached).

- Forward-Thinking and Influence: Adopting Qwik now puts you ahead of the curve on the future of web frameworks. Its ideas influence others; for instance, React itself explores similar territory (React Server Components + React “use” hook and even talks of resumability in the future). Developer experience in Qwik, while different, is pretty nice if you’re comfortable with hooks (it has a familiar feel, albeit with a different twist on useEffect, useSignal vs useState, etc.).

Cons: Naturally, Qwik comes with considerations that might make it a less straightforward choice:

- Learning Curve & Mental Model: Qwik’s programming model, while using JSX, is not the same as React’s. Developers need to learn Qwik-specific concepts like $ (to mark lazy execution), useSignal (Qwik’s reactive state primitive), useContextProvider (slightly different from React’s Context API), and the idea that code might run on either server or client seamlessly. In some ways, it’s closer in spirit to frameworks like Solid, and it can take time to internalize resumability. Debugging can also be a new experience because the code doesn’t all run in one place: part of it has already run on the server.

- Ecosystem & Maturity: Qwik is still young. The ecosystem (libraries, UI component sets, etc.) is much smaller. The community is enthusiastic but small compared to React’s. Issues and edge cases that aren’t well-documented might pop up.

- Build Complexity: Qwik’s advanced optimizer does a lot under the hood. The build process (using Rollup or Vite with Qwik plugins) is more complex than for a typical React app. Qwik also encourages certain patterns, such as guarding server-only code with an isServer check inside useTask$ (early releases used useServerMount$ for this). It’s an adjustment from the all-client or straightforward SSR mindset.

- Performance Trade-offs in Certain Metrics: Qwik’s approach isn’t magic – if your app requires a lot of interactive features, eventually, a lot of chunks will be loaded. It excels at first load and in apps where users may not touch every feature. But in a worst-case scenario where a user triggers every possible interactive component, they’ll end up downloading most of the app’s code anyway (though spread out over time). The Qwik runtime and its client loader also add a bit of JS (though quite small). So Qwik shines for interactive sites but is less necessary for pure content sites.

Popularity & Usability. Qwik’s popularity is growing in the front-end community, but it’s nowhere near mainstream yet. It often comes up in discussions about next-gen frameworks.

From a usability standpoint, Qwik’s documentation is quite comprehensive, and the team provides guides for migration and comparison. Tools like the Qwik CLI (npm create qwik@latest) make it easy to start a project. Once up and running, developers have noted that building with Qwik isn’t too bad – it’s the initial conceptual leap that’s biggest.

Qwik City adds the familiar layer of filesystem routing, so making pages and layouts feels somewhat like Next.js (aside from Qwik’s syntax in the files).

Performance. A well-built Qwik site can have an almost negligible First Contentful Paint delay (just static HTML) and nearly instant Time to Interactive because there’s no large script blocking interaction. Qwik has demonstrated sub-second interactive times on even quite complex pages in demos.

For large-scale content sites with lots of pages, Qwik’s SSG mode means the site behaves like a static site for initial navigation and then only loads what’s needed. This can drastically reduce server costs too, as many pages can be served as static files, with Qwik’s JS enabling interactive parts.

Build performance might be slower than more straightforward frameworks because Qwik does heavy lifting to optimize the output, but it’s usually worth it for production. Qwik’s approach also scales well: as an application grows, a React or Next app might suffer more from an ever-growing hydration cost, whereas Qwik will just have more lazy chunks (which, if not used, won’t hurt the initial load much). This is why Qwik proponents say it offers predictable scaling – you don’t pay a performance tax for adding features that users might not immediately use.
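A rough cost model shows why this scaling argument holds, and also where it stops holding. The sizes below are made-up placeholders rather than measurements of any real framework, and the two helper functions are a simplification that ignores caching, prefetching, and compression.

```typescript
// Hypothetical sizes in KB; real numbers depend entirely on the app.
type Feature = { name: string; sizeKb: number };

// Eager hydration: the runtime and every feature's code ship up front.
function eagerInitialKb(runtimeKb: number, features: Feature[]): number {
  return runtimeKb + features.reduce((sum, f) => sum + f.sizeKb, 0);
}

// Lazy loading: a small loader ships up front; feature code loads on use.
function lazyTotalKb(loaderKb: number, features: Feature[], used: string[]): number {
  return loaderKb + features
    .filter((f) => used.indexOf(f.name) !== -1)
    .reduce((sum, f) => sum + f.sizeKb, 0);
}

const features: Feature[] = [
  { name: "search", sizeKb: 30 },
  { name: "comments", sizeKb: 50 },
  { name: "checkout", sizeKb: 20 },
];

const eager = eagerInitialKb(45, features);
const lazyAtLoad = lazyTotalKb(1, features, []);
const lazyOneFeature = lazyTotalKb(1, features, ["search"]);
const lazyWorstCase = lazyTotalKb(1, features, ["search", "comments", "checkout"]);

console.log({ eager, lazyAtLoad, lazyOneFeature, lazyWorstCase });
```

Adding a fourth feature raises the eager number for every visitor but leaves the lazy initial cost unchanged. In the worst case, where the user touches everything, the lazy total approaches the eager one, which matches the trade-off described in the cons above.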

Qwik is a framework that flips the script on how web apps load in the browser. For teams where performance is priority #1 – say, consumer-facing sites where conversions or engagement depend on speed – Qwik deserves serious consideration. It asks you to step outside the React ecosystem but rewards you with some of the fastest web performance available in 2025.

Docusaurus: Static Site Generator Focused on Docs

Docusaurus is a bit different from the others – it’s a static site generator focused on documentation websites. Built by Facebook (Meta) and first released in 2017, Docusaurus has become a go-to tool for open source project docs and other content-heavy sites (like blogs, product docs, etc.). It uses React under the hood: a Docusaurus site is essentially a single-page React app that’s statically generated.

The key idea is to make it easy to write Markdown/MDX content and generate a polished website from it. Out of the box, Docusaurus supports versioned documentation (crucial for projects maintaining multiple release docs), internationalization (i18n), and a plugin system for customization. Content is written in Markdown (with MDX allowing you to embed React components within docs).

The theme is customizable via styling or even replacing components, and many sites use the default look (which is clean and familiar). Docusaurus also supports custom pages (so you can use it for a marketing homepage or blog section alongside docs).
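As an illustration, a pared-down site configuration might look like the sketch below. The title, URL, and locale values are placeholders, and a real `docusaurus.config.ts` would typically carry more options (theme config, navbar, and so on) and end with `export default config;`.

```typescript
// Minimal sketch of a Docusaurus site config; all values are placeholders.
const config = {
  title: "My Project",
  url: "https://example.com",
  baseUrl: "/",

  // Built-in internationalization: one default locale plus translations.
  i18n: {
    defaultLocale: "en",
    locales: ["en", "fr"],
  },

  // The classic preset bundles the docs, blog, and pages plugins with a theme.
  presets: [
    [
      "classic",
      {
        docs: { sidebarPath: "./sidebars.js" },
        blog: { showReadingTime: true },
      },
    ],
  ],
};

console.log(config.i18n.locales);
```

With a config along these lines, Markdown files dropped into the docs folder become pages, and a new docs version can be cut from the command line (for example with `npm run docusaurus docs:version 1.0.0`).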

Pros: Docusaurus’s focus makes it very powerful for documentation needs:

- Easy Content Creation: Writers can author pages in Markdown (or MDX for advanced use), which is far easier than writing raw HTML or complex JSX. The toolchain handles converting that to React pages. The MDX support is a killer feature – you can embed live React components in your docs for demos or interactive examples, which many project sites use (e.g., interactive code sandboxes in the docs).

- Out-of-the-Box Docs Features: Docusaurus comes with everything you typically need for a docs site: a sidebar navigation, previous/next links, version dropdown, locale dropdown, search, etc., all wired up. Localization is built-in, allowing you to translate documentation easily. Versioning is automatic.

- Maintained by Meta & Used Widely: Backed by Meta, the company behind React, Docusaurus is actively maintained and kept up to date with React. In fact, Docusaurus 3.x now uses React 18/19 and supports modern conventions. There’s also a community of plugin developers and contributors making search improvements, UI tweaks, etc.

- Customization & Extensibility: Even though it’s turnkey, you’re not locked into the default appearance. Because it’s React-based, you can override any component in the theme if you need to. The pluggable nature means Docusaurus can adapt to many documentation needs while providing sane defaults. Also, deploying a Docusaurus site is easy – it’s just static files, so it can be served on GitHub Pages, Vercel, Netlify, or any static host.

Cons: Being specialized, Docusaurus has some limitations:

- Niche Purpose (Not a General App Framework): If you need functionality beyond a documentation/blog site, Docusaurus might not be suitable. You can create additional pages, but the routing and structure are geared toward docs and blogs.

- Performance of Client Bundle: Docusaurus sites are static, but the client side is a complete React app. This means that after the initial HTML loads, React hydration occurs. The JS bundle (which includes the whole app, search index [if not using an external service], and any custom components) can be sizable for extensive documentation sets, potentially a few hundred KBs or more.

- Build Times for Huge Sites: If your documentation is extensive (thousands of pages), the build time using Docusaurus could become long because it generates a React page for each. This is a typical static site generator problem.

- Limited Markdown Flavor: Docusaurus uses MDX, which is powerful, but if you have existing docs with lots of custom Markdown syntax or plugins (like remark plugins), you might need to adjust them. This is less a hard limitation than a migration cost of adapting to Docusaurus’s Markdown processing.

Popularity & Usability. Docusaurus is extremely popular in the open-source world. The usability for its intended purpose is high: one can spin up a new docs site with npx create-docusaurus@latest and have a working site with sample docs in minutes. The project structure (with a docs folder, sidebars.js config, etc.) is easy to understand. Content writers can start writing markdown without worrying about the site framework.

Performance. Docusaurus yields excellent documentation site performance, but it has some caveats. Static prerendering means users get pre-rendered HTML for each docs page, which is fantastic for SEO (search engines can index easily) and ensures that if a user clicks a link from Google, they see content immediately. The initial load still includes the JS bundle for React and the Docusaurus client, but the content is already there, so it’s not blocking the user from reading. Once hydrated, navigation between pages is speedy (no full reload, just content swapping via React Router).

The overhead to be aware of is mainly the JavaScript cost – something Astro or VuePress (which have partial hydration or SSR-only content) try to reduce. Docusaurus v3 has improved by upgrading React and using more efficient techniques. For most documentation scenarios, the performance is more than adequate (especially given users often have some tolerance for a docs site to load since they expect rich features like search).

Docusaurus is the specialist of the bunch – it’s the best choice when your goal is to publish content (docs, tutorials, blogs) with minimal fuss and maximum built-in functionality.

Showdown: Comparing Top React SSGs

When evaluating these frameworks, it’s important to consider various performance aspects: core web vitals, build speed, initial load and runtime performance, and developer experience (DX). Each tool makes different trade-offs.

Here's a comparative overview of the frameworks and libraries we've covered here:

| Feature | Next.js | Astro | React Router v7 | TanStack Start | Qwik | Docusaurus |
| --- | --- | --- | --- | --- | --- | --- |
| What is it? | React framework | JavaScript framework | Routing library for React apps | Full-stack React framework | JavaScript framework | React-based SSG |
| Template Language | JSX | Astro Components (a mix of JSX, Markdown, and other templating languages) | JSX | JSX | JSX | Markdown with JSX support |
| Rendering Modes | SSR, SSG, ISR, CSR | SSG with partial hydration | CSR | SSR with streaming, CSR | SSR with resumability, CSR | SSG with client-side navigation |
| Component Framework Support | React | React, Vue, Svelte, and others | React | React | Qwik (React via qwikify$) | React |
| API Routes | Yes | No | No | Yes | No | Yes |
| Serverless Functions (API) | Yes | No | No | Yes | No | Yes |
| Code Splitting | Yes | Yes | Yes | Yes | Yes | Yes |
| Content Preview | Yes | Yes | Yes | Yes | Yes | Yes |
| Image Optimization | Yes | Yes | No | Yes | Yes | No |
| Performance (check table below) | High | Very High | High | Very High | Extremely High | High |
| SEO | Excellent | Excellent | Good | Excellent | Excellent | Excellent |
| Deployment | Vercel, Netlify, AWS, others | Netlify, Vercel, others | Any platform supporting React | Any platform supporting React | Any platform supporting React | Any platform supporting React |
| Learning Curve | Moderate | Moderate | Low | High | High | Low |

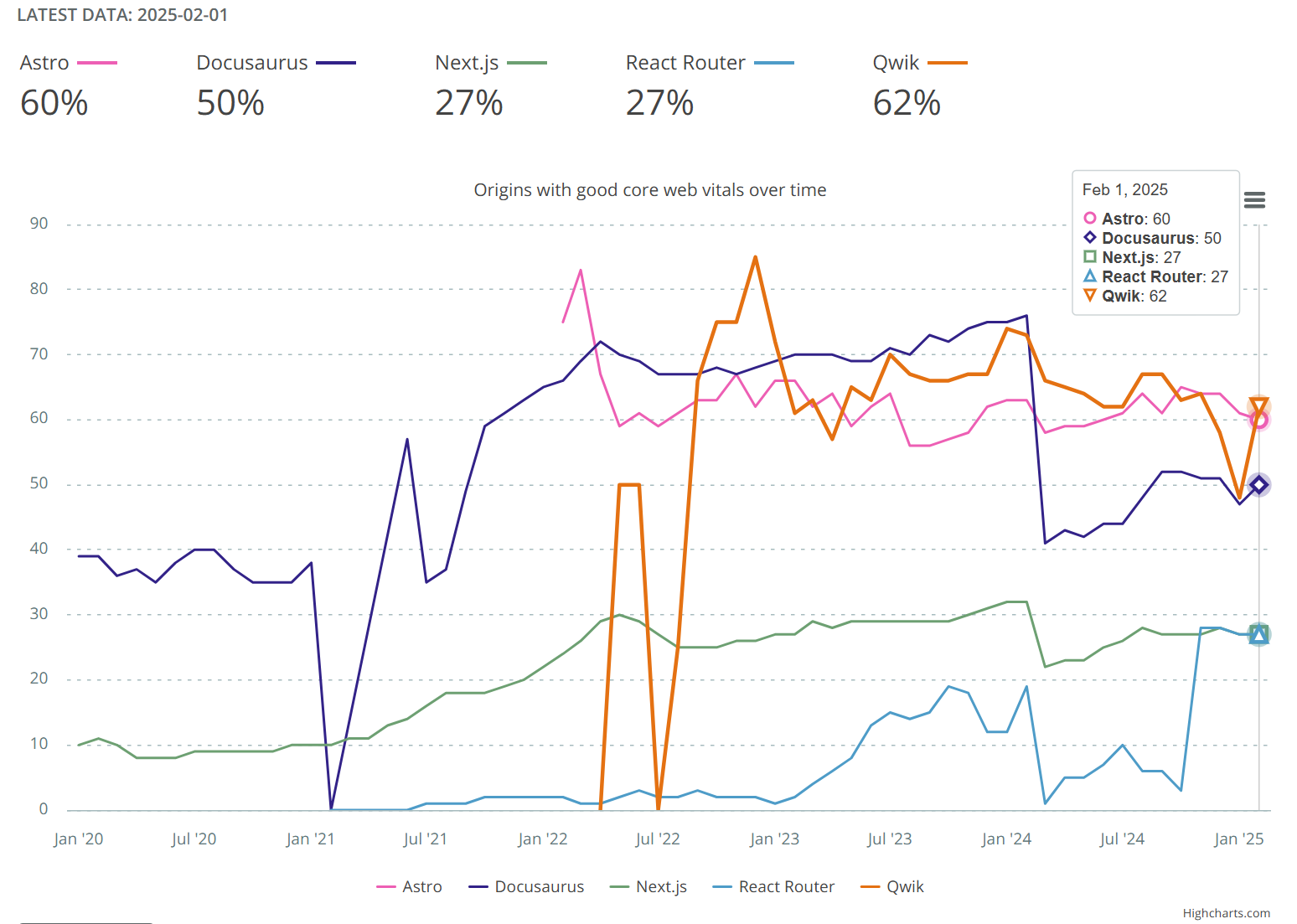

You can find more on the performance of each tool in the HTTP Archive’s Core Web Vitals (CWV) report.
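When reading such a report, it helps to know how raw numbers map to ratings. The sketch below encodes the publicly documented Core Web Vitals thresholds (LCP, INP, CLS); the `rate` helper and the sample values are illustrative assumptions, not part of any report's tooling.

```typescript
type Rating = "good" | "needs improvement" | "poor";

// [upper bound for "good", upper bound for "needs improvement"]
const thresholds = {
  LCP: [2500, 4000], // Largest Contentful Paint, milliseconds
  INP: [200, 500],   // Interaction to Next Paint, milliseconds
  CLS: [0.1, 0.25],  // Cumulative Layout Shift, unitless score
} as const;

type Metric = keyof typeof thresholds;

function rate(metric: Metric, value: number): Rating {
  const [goodMax, needsImprovementMax] = thresholds[metric];
  if (value <= goodMax) return "good";
  if (value <= needsImprovementMax) return "needs improvement";
  return "poor";
}

// Sample values for a hypothetical page.
console.log(rate("LCP", 1800), rate("INP", 350), rate("CLS", 0.3));
```

Field reports usually judge a site by the 75th percentile of real user visits, so a single lab run rated "good" does not guarantee the same rating in such a report.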

2025 Trends and Community Expectations for React SSGs

2025 will likely not be about one framework “winning everything,” but rather each framework solidifying its domain: Next.js as the enterprise workhorse, Astro as the performance-oriented static site builder, React Router v7 (formerly Remix) as the developer-friendly full-stack tool (especially for mid-sized apps), Qwik as the performance innovator, TanStack Start as the flexible modern stack for the adventurous, and Docusaurus as the docs specialist.

Developers have an embarrassment of riches in the React ecosystem now – far from the days when Create React App or Gatsby were the default static options. The ecosystem is moving toward faster, more efficient rendering techniques, and these frameworks exemplify that. We predict the real “winners” in 2025 will be developers and users, who will enjoy faster sites and better DX as these tools push each other to improve.

Additional reads:

JavaScript Frameworks - Heading into 2025 - https://dev.to/this-is-learning/javascript-frameworks-heading-into-2025-hkb

The State of Frontend 2024 - https://tsh.io/state-of-frontend#frameworks

JavaScript Frameworks in 2025 video - https://www.youtube.com/watch?v=TKcetuFoYU0

Performance comparison video - https://www.youtube.com/watch?v=E5amN0_1XyE
