Event-Driven HTTP Caching for eCommerce

Let us dive into caching, a topic typically described as one of the most complicated for developers to learn. Or better, the hardest to master.

With the rise of Jamstack and static site generators like Gatsby and NextJS, we have a new paradigm for generating and serving webpages - fast. In eCommerce, you typically have a combination of frequently changing content and a potentially huge number of product pages.

Is the holy grail for modern eCommerce an event-driven cache strategy?🤔

Let’s dive into the nitty-gritty and see.

What Is a Fast Webpage?

There are different definitions of what a fast webpage is.

If we take a human perspective, we have the good old response time limits from Nielsen Norman Group:

“0.1 second is about the limit for having the user feel that the system is reacting instantaneously,” i.e., you should have a response time of 100ms or less for your website to feel instantaneous or simply fast.

There is also a scalability concern when you have many concurrent users. You want the site to feel fast for all of them. Think of Black Friday eCommerce peaks: the site should be fast for all simultaneous shoppers.

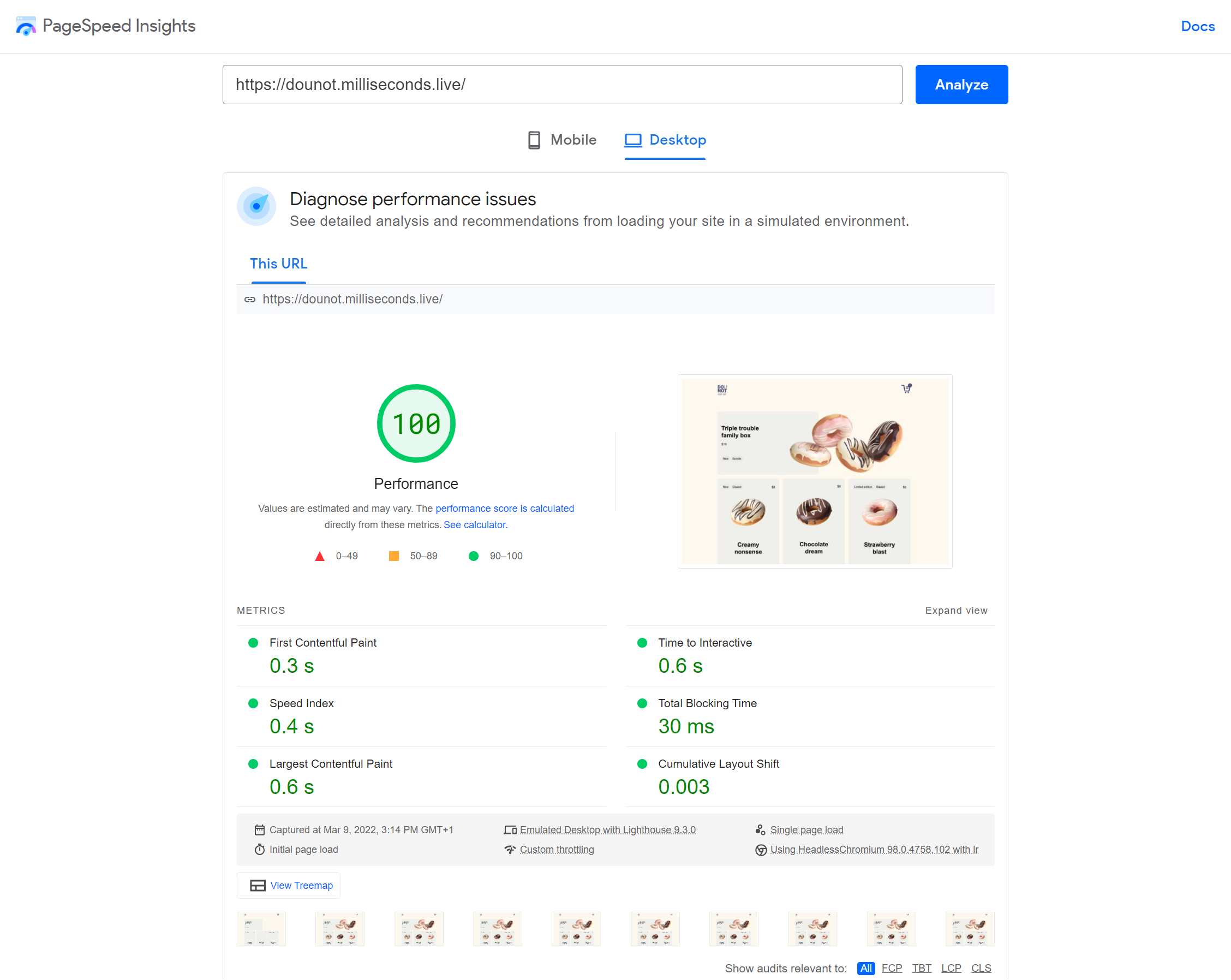

Then we have the site speed metrics reported by tools like Lighthouse, which give an objective measurement of the perceived performance of your website. The result is a score between 0 and 100. You want to stay in the green here, which is 90+.

The main perspectives of a fast webpage are:

- One user: webpage rendered in a browser in 100ms or less (which is the time that feels instant for humans)

- Lots of users: webpage rendering in a browser in 100ms or less - even with high traffic (scalability)

- Measured by Google as a fast website with Core Web Vitals.

Here is how to measure your Core Web Vitals and why it matters. As for scalability, you can read about load testing your website.

Why Would You Want to Have a Fast Webpage?

There are several reasons why you would want to have a fast webpage. In the context of eCommerce, we typically talk about these as reasons:

- Better user experience (UX)

- Better organic ranking in search results (SEO)

- You pay less for Google Ads 😱

- You get increased conversion from your visitors

- More environmentally friendly

If you want to dive into the details, check the top 5 reasons why eCommerce needs to be blazing fast. See what a score of 100 feels like with our Dounot Remix eCommerce boilerplate.

CDN Caching Is Fast

Now, let us assume that you have optimized a webpage for a single visitor and reached the perfect Core Web Vitals score. Next, we need to serve this page fast for every user, independent of their location.

This is where a Content Delivery Network (CDN) comes in. A CDN can be seen as a set of reverse proxies distributed at the edge of the network, all around the world. The CDN caches your webpage in multiple locations and is ready at any time to deliver the cached page to your visitors in milliseconds.

A cached webpage on a CDN is fast. No matter what. But how do we get it there in a cached state? How long is it cached? How can I optimize the cache strategy for my business case?

Quick Note

This might be a good time to check our Practical Guide to Web Rendering, where we discuss everything about the most common web rendering solutions.

Static Site Generation (SSG)

Static site generation is about pre-building your webpages as static files and deploying them to a CDN. Popular static site generators include Gatsby and NextJS. Static site generation involves fetching all the dynamic data from an API and rendering the results as static HTML, JavaScript, etc.

Once done, all documents are uploaded to the CDN and are instantly cached. Even for the first visitor.

In the early 2000s, this was called design & deploy and was a popular approach to pre-generate your website every time a change was made (yes, I am saying history repeats itself).

With traditional static site generators, you have to regenerate your whole site every time the content changes in your CMS, PIM, or headless commerce service. Those regenerations are triggered by events (usually webhooks) that enable you to rebuild a fresh version on publish, update, remove, etc. This might be fine, especially if your website is small and/or does not have super-dynamic content.

Server-side Rendering (SSR)

With server-side rendering, the webpage is dynamically rendered on the webserver when it gets a request. There is no build step as all pages are built on the server - on demand.

This is great for serving fresh content, but it is usually slow as the user has to wait for the page to be built on the webserver before it is served. This is how monolithic applications, like a Magento webshop, traditionally work.

A benefit is that if some pages are never visited, well then you never build them. That is great for large websites with thousands or even millions of pages. Generating all those pages will take a long time and consume lots of server resources and energy, which translates into $$$.

Enter CDN Caching

If we now place a CDN in the middle (that is in between your web server and the visitors' browser) then we can cache the page and make it fast.

That is, we can make it fast for the second visitor to that page. The first visitor has to wait for the page to be generated by the webserver before the CDN can cache it and serve it.

So, the second visitor to a page will get a super-fast page served. It is cached, and the webserver is not asked to dynamically generate the page. Meaning no server time, no API calls, no database requests, cheaper.
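The first-visitor and second-visitor behavior can be sketched with a tiny in-memory stand-in for a CDN edge. This is only an illustration: the class name `EdgeCacheSketch`, the `fetchPage` method, and the render function are hypothetical, and a real CDN also applies expiry rules that are left out here.

```typescript
// Illustrative stand-in for a CDN edge cache (hypothetical names, no expiry).
type RenderPage = (url: string) => string;

class EdgeCacheSketch {
  private pages = new Map<string, string>();
  private renderAtOrigin: RenderPage;
  public originRequests = 0;

  constructor(renderAtOrigin: RenderPage) {
    this.renderAtOrigin = renderAtOrigin;
  }

  // Returns the page body and where it was served from.
  fetchPage(url: string): { body: string; servedFrom: "cache" | "origin" } {
    const cached = this.pages.get(url);
    if (cached !== undefined) {
      // Cache hit: no server time, no API calls, no database requests.
      return { body: cached, servedFrom: "cache" };
    }
    // Cache miss: this visitor waits for the origin to render the page.
    this.originRequests += 1;
    const body = this.renderAtOrigin(url);
    this.pages.set(url, body);
    return { body, servedFrom: "origin" };
  }
}

const shopEdge = new EdgeCacheSketch((url) => `rendered page for ${url}`);
const firstVisit = shopEdge.fetchPage("/products/sofa");  // served from the origin
const secondVisit = shopEdge.fetchPage("/products/sofa"); // served from the cache
```

Only the first request reaches the origin; every later request for the same URL is answered from the map.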

A CDN is a vital part of the cache strategy for both statically generated sites and server-side rendered sites. The pages are cached and kept fast.

But how do you control the cache?

There are 2 models when it comes to caching:

- Expiration model

- Validation model

The validation model is based on ETag or Last-Modified and involves the 304 Not Modified response. While it has its use cases, it requires validation (to confirm that nothing has changed) from the origin (or a server in the middle) before a cached version can be used.
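The ETag check described above can be sketched roughly as follows. This is a simplified illustration, not a full HTTP implementation: the function names and the toy hash used to derive the ETag are assumptions, and Last-Modified handling is left out.

```typescript
interface ValidationAnswer {
  statusCode: 200 | 304;
  body: string | null;
  etag: string;
}

// Toy, non-cryptographic hash used only to derive a demo ETag (assumption).
function demoEtag(content: string): string {
  let hash = 0;
  for (let i = 0; i < content.length; i++) {
    hash = (hash * 31 + content.charCodeAt(i)) >>> 0;
  }
  return `"${hash.toString(16)}"`;
}

// The origin compares the client's If-None-Match value with the current ETag.
function respondWithValidation(
  currentBody: string,
  ifNoneMatch: string | null
): ValidationAnswer {
  const etag = demoEtag(currentBody);
  if (ifNoneMatch !== null && ifNoneMatch === etag) {
    // Nothing changed: 304 Not Modified, no body is sent again.
    return { statusCode: 304, body: null, etag };
  }
  return { statusCode: 200, body: currentBody, etag };
}

const firstAnswer = respondWithValidation("sofa page v1", null);
const repeatAnswer = respondWithValidation("sofa page v1", firstAnswer.etag);
const changedAnswer = respondWithValidation("sofa page v2", firstAnswer.etag);
```

Note that even the 304 case still costs a round trip to the origin, which is the extra request the text refers to.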

With a CDN, we don’t want extra requests to get that validation. Therefore the expiration model is used, mainly through the Cache-Control header in the responses.

There are three key parts of cache control you should know about:

- max-age: how many seconds the page can be cached. This tells the browser how long to cache the page. It also tells the CDN how long to cache the page (if no s-maxage is set).

- s-maxage: stands for shared max-age, how many seconds the page is cached in the CDN. If you define this, it overrides max-age for the CDN cache. So you can, e.g., have a 1 second cache in the browser and 1 day in the CDN.

- stale-while-revalidate: once s-maxage is reached on the CDN, a stale version of the page is served while a fresh version is fetched from the origin and cached. This continues only for the given number of seconds; after that, the stale version is no longer served.
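To make the interplay of the three directives concrete, here is a small sketch that reads a Cache-Control value and works out the effective lifetimes. The function and field names are hypothetical, and the parser only handles the three directives discussed above.

```typescript
interface ParsedCachePolicy {
  browserTtlSeconds: number;  // how long the browser may cache
  cdnTtlSeconds: number;      // how long the CDN (shared cache) may cache
  staleWindowSeconds: number; // how long a stale copy may still be served
}

function parseCachePolicy(headerValue: string): ParsedCachePolicy {
  const directives = new Map<string, number>();
  for (const part of headerValue.split(",")) {
    const [rawKey, rawValue] = part.trim().split("=");
    const seconds = Number(rawValue);
    if (rawKey && rawValue !== undefined && Number.isFinite(seconds)) {
      directives.set(rawKey.toLowerCase(), seconds);
    }
  }
  const maxAge = directives.get("max-age") ?? 0;
  return {
    browserTtlSeconds: maxAge,
    // s-maxage overrides max-age for the CDN; otherwise max-age applies.
    cdnTtlSeconds: directives.get("s-maxage") ?? maxAge,
    staleWindowSeconds: directives.get("stale-while-revalidate") ?? 0,
  };
}

// 1 second in the browser, 1 day in the CDN.
const splitPolicy = parseCachePolicy("max-age=1, s-maxage=86400");
// Without s-maxage, the CDN falls back to max-age.
const sharedPolicy = parseCachePolicy("max-age=60");
```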

Feel free to nerd out on HTTP Cache-Control further.

Incremental Static Regeneration (ISR)

The strategy with incremental static regeneration is to do an initial full (or partial) build of all your web pages and store static versions on the CDN. Then whenever a page is requested the static version is served while a fresh cache is generated in the background.

You have an initial big build. Then everyone gets fast and fresh responses.

All documents are pre-rendered. Pretty fresh, but maybe just a little bit off. You can typically define how long a cached version should be kept on the CDN before a new version is freshly generated - often as low as 1 second by default.

This is similar to stale-while-revalidate in CDNs, but it is happening on your server. Well, nowadays this is typically happening in some sort of edge function.

The method is similar to what you would do when e.g. indexing your products in a search service like Algolia. Initial big build (index all), then whenever a product is updated you update the index entry for that product. But search indexing is typically based on events: it updates when content changes. Ok, hold that thought.

Stale While Revalidate

Stale-while-revalidate is more or less the HTTP Cache-Control equivalent of incremental static regeneration. When max-age is up, the CDN has to go to the origin server for a fresh version. With stale-while-revalidate, the CDN keeps serving the current “stale” cached version while it fetches a fresh version from the origin in the background and updates the cache.

Even with multiple visitors arriving after max-age is up, they all get the stale version, but fast fast fast.

You only build what is visited. This is great, especially if you have a large store. What if you have 5 million products? Then regenerating all pages is not really an option.

Incremental static regeneration and stale-while-revalidate are pretty much the same. For the user, it is the same, with the exception of the initial pre-built version you get with incremental static regeneration. With stale-while-revalidate, you get a “slow” initial request as the page is generated on the fly.

A webserver is essentially an incremental site generator. A request comes in and the server generates that page - dynamically. Independent of the webserver technology; PHP, .NET, React SSR or Remix 👀

Stale-while-revalidate is defined as the number of seconds the stale version may be served while the CDN revalidates against the origin. The page is refreshed in the background while the stale version is served. Once the total time to live (TTL), that is (s-)max-age + stale-while-revalidate, has been reached, the stale version is no longer served: the page is generated by the origin and served fresh.
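The three phases of a cached page can be written down as a small decision function. This is a sketch with hypothetical names; it only looks at the age of the cached copy and ignores everything else a real CDN takes into account.

```typescript
type FreshnessState = "fresh" | "stale-but-servable" | "expired";

// ageSeconds: how long ago the CDN stored the page.
function classifyCachedPage(
  ageSeconds: number,
  sMaxAge: number,
  staleWhileRevalidate: number
): FreshnessState {
  if (ageSeconds < sMaxAge) {
    return "fresh"; // served straight from the cache
  }
  if (ageSeconds < sMaxAge + staleWhileRevalidate) {
    return "stale-but-servable"; // served stale, refreshed in the background
  }
  return "expired"; // TTL reached: the visitor waits for the origin
}

// Example policy: s-maxage of 60 seconds, stale-while-revalidate of 300 seconds.
const earlyRequest = classifyCachedPage(10, 60, 300);  // "fresh"
const laterRequest = classifyCachedPage(120, 60, 300); // "stale-but-servable"
const lateRequest = classifyCachedPage(400, 60, 300);  // "expired"
```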

Enter Cache Hit Ratio

Cache hit ratio becomes interesting when comparing static site generation with pure HTTP Cache with stale while revalidate. The cache hit ratio is a number that defines the percentage of pages that are served from the CDN vs generated from the origin.

( 1 - Origin pages served / CDN pages served ) * 100 = Cache Hit Ratio %

You want the cache hit ratio to be as close to 100 as possible. In high-traffic scenarios, I have easily seen a cache hit ratio of 99%+ with a traditional HTTP cache, meaning that for every 100 pages served, fewer than 1 is generated by the origin.

Frameworks like NextJS have a pre-fetching strategy when serving pages from the edge. What this means is that when a visitor gets a (cached) page, NextJS prefetches a number of links the visitor is likely to click. 8 additional prefetched URLs is not uncommon. Ok, for the user this is lightning fast - but it can generate 8 (!) origin requests for a single URL.
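As a worked example of the cache hit ratio formula, here is a minimal sketch. The function name and the traffic numbers are made up for illustration.

```typescript
// Cache hit ratio in percent, following
// (1 - origin pages served / CDN pages served) * 100.
function cacheHitRatioPercent(
  originPagesServed: number,
  cdnPagesServed: number
): number {
  if (cdnPagesServed <= 0) {
    throw new RangeError("cdnPagesServed must be greater than zero");
  }
  // Algebraically the same as the formula above, arranged to keep the
  // arithmetic on whole numbers for as long as possible.
  return ((cdnPagesServed - originPagesServed) * 100) / cdnPagesServed;
}

// 1000 pages served by the CDN, 10 of them generated by the origin.
const healthyRatio = cacheHitRatioPercent(10, 1000);    // 99
// More origin requests than pages served gives a negative ratio.
const negativeRatio = cacheHitRatioPercent(1500, 1000); // -50
```

The second case shows how the number can drop below zero when the origin handles more requests than the CDN serves pages.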

Now, this can be tuned with TTL for NextJS, but with the initial build and the greedy prefetching you can actually end up with a negative cache hit ratio. This is not super good for the $$$ or the 🌿.

We have actually seen scenarios with MORE origin requests than the cached pages served with NextJS. Usually, this is no problem if you have a medium-sized site and your backend has a fast API.

How often do you want to re-build? Generating a page is expensive. Serving cached data is cheap. So you should keep an eye on your cache hit ratio. Generating the page is also energy expensive, so a high cache hit ratio is cheaper and greener. Less server time, fewer API calls, less energy. Less $.

Enter Event-Driven Caching

We have looked at two different approaches for serving fast cached pages. They both have a similar approach for expiring the cache using TTL and serving stale pages while a new fresh dynamic page is generated in the background.

There can be a big difference between the two when you look at the actual cache hit ratio if you are not careful. Make sure you are on top of requests from the CDN vs the origin.

With the recent release of on-demand revalidation in NextJS, we can change up the game for statically generated sites. We can trigger a revalidation of a page based on a webhook or event. This is a big change as you can now use a long TTL:

- Cache pages longer on the CDN (still want a reasonably short max-age for the browser)

- Trigger a revalidation when content changes

This strategy can dramatically increase your cache hit ratio.
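A webhook handler for this kind of setup mostly has to answer one question: which pages does this content change affect? A rough sketch follows, assuming a hypothetical event shape and URL structure (`/products/...`, `/categories/...`), neither of which comes from a specific CMS or framework. Each returned path would then be handed to whatever revalidation call your framework offers.

```typescript
// Hypothetical webhook payload; real CMS, PIM, or commerce events will differ.
interface ContentChangeEvent {
  kind: "product.updated" | "product.deleted" | "category.updated";
  slug: string;
  categorySlugs?: string[];
}

// Maps a content change to the page paths that should be regenerated.
function pathsToRevalidate(change: ContentChangeEvent): string[] {
  const paths = new Set<string>();
  if (change.kind === "category.updated") {
    paths.add(`/categories/${change.slug}`);
  } else {
    // A product change affects its own page and every listing it appears on.
    paths.add(`/products/${change.slug}`);
    for (const category of change.categorySlugs ?? []) {
      paths.add(`/categories/${category}`);
    }
  }
  return Array.from(paths).sort();
}

const sofaPaths = pathsToRevalidate({
  kind: "product.updated",
  slug: "sofa",
  categorySlugs: ["furniture", "sale"],
});
// ["/categories/furniture", "/categories/sale", "/products/sofa"]
```

Only the affected pages are rebuilt, so everything else can stay cached for a long time.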

If you are using a framework like Remix, you have (perhaps) even more control over your cache headers and can rely on stale-while-revalidate in your CDN, for example with Cloudflare.

Many CDNs have supported HTTP cache purging based on events for some time. This purging has also become more fine-grained: you can purge single URLs, multiple URLs, tagged pages, etc.
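Tag-based purging can be pictured as an index from tags to URLs. The sketch below is an in-memory illustration with hypothetical names and tag formats; actual CDNs expose this through their own purge APIs, which are not shown here.

```typescript
// In-memory illustration of URL and tag purging (hypothetical names).
class TaggedPageCache {
  private pages = new Map<string, string>();
  private urlsByTag = new Map<string, Set<string>>();

  store(url: string, body: string, tags: string[]): void {
    this.pages.set(url, body);
    for (const tag of tags) {
      const urls = this.urlsByTag.get(tag) ?? new Set<string>();
      urls.add(url);
      this.urlsByTag.set(tag, urls);
    }
  }

  lookup(url: string): string | undefined {
    return this.pages.get(url);
  }

  // Purge a single URL.
  purgeUrl(url: string): boolean {
    return this.pages.delete(url);
  }

  // Purge every page stored with the given tag; returns how many were removed.
  purgeTag(tag: string): number {
    const urls = this.urlsByTag.get(tag);
    if (!urls) return 0;
    let purged = 0;
    urls.forEach((url) => {
      if (this.pages.delete(url)) purged += 1;
    });
    this.urlsByTag.delete(tag);
    return purged;
  }
}

const taggedCache = new TaggedPageCache();
taggedCache.store("/products/sofa", "sofa page", ["product:sofa", "category:furniture"]);
taggedCache.store("/categories/furniture", "furniture listing", ["category:furniture"]);
taggedCache.store("/products/lamp", "lamp page", ["product:lamp", "category:lighting"]);

// A change in the furniture category purges both pages carrying that tag.
const purgedCount = taggedCache.purgeTag("category:furniture");
```

After the purge, the lamp page is still cached, while the sofa page and the furniture listing will be regenerated on their next request.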

With Remix, for example, you could configure your HTTP cache along the lines of:

max-age: 1 second

s-maxage: 1 week

stale-while-revalidate: 1 month

This would cache the page in the web browser for 1 second before checking for a fresh page on the CDN. The CDN will cache the page for 1 week. After that week, and for up to 1 more month, the stale version of the page will be served (while a fresh page is generated in the background).
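Cache-Control values are expressed in seconds, so the three durations above need converting before they go into a header. Here is a minimal sketch, assuming a 30-day month and a hypothetical helper name. In a Remix app, the resulting string would typically be returned as the Cache-Control value from a route's headers function.

```typescript
const SECOND = 1;
const DAY = 24 * 60 * 60 * SECOND;
const WEEK = 7 * DAY;
const MONTH = 30 * DAY; // assumption: a month is counted as 30 days

interface CachePolicyInput {
  maxAge: number;               // browser cache, in seconds
  sMaxAge: number;              // CDN cache, in seconds
  staleWhileRevalidate: number; // stale serving window, in seconds
}

// Builds the Cache-Control header value from the three durations.
function buildCacheControl(policy: CachePolicyInput): string {
  return [
    `max-age=${policy.maxAge}`,
    `s-maxage=${policy.sMaxAge}`,
    `stale-while-revalidate=${policy.staleWhileRevalidate}`,
  ].join(", ");
}

const longLivedPolicy = buildCacheControl({
  maxAge: SECOND,
  sMaxAge: WEEK,
  staleWhileRevalidate: MONTH,
});
// "max-age=1, s-maxage=604800, stale-while-revalidate=2592000"
```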

Then the kicker: based on events triggered by the CMS, PIM, or headless commerce service, you can also trigger the revalidation yourself. This means you generate a fresh page based on an event from the source of the data, ensuring that a fresh version of the page is served when the content changes.

This is super nice for larger webshops with lots of products and frequent updates of prices, stock, and product highlights on landing pages.